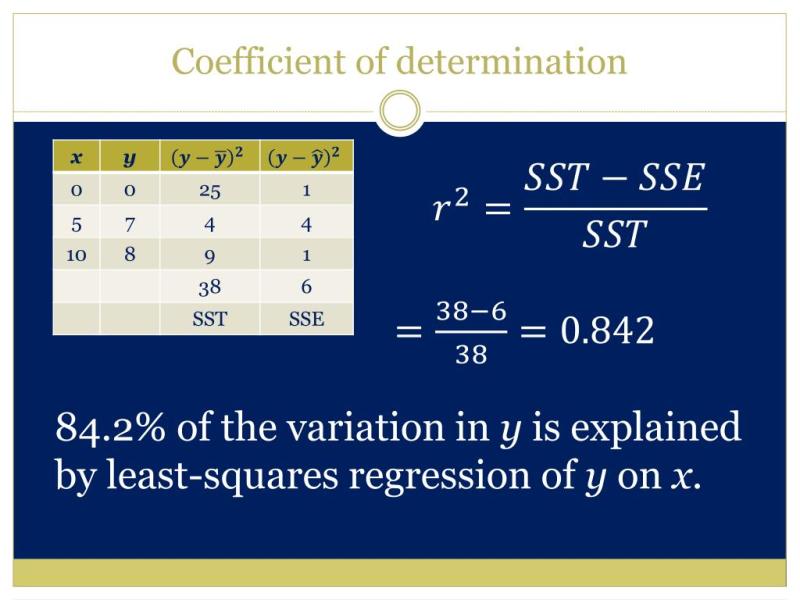

What does the coefficient of determination mean?

The coefficient of determination, often denoted as R-squared (R²), is a statistical measure that indicates the proportion of the variance in the dependent variable that is predictable from the independent variables in a regression analysis. In other words, it tells you how well the independent variables in a regression model explain the variability in the dependent variable. Here's what the coefficient of determination means:

R-squared = 0: None of the variance in the dependent variable is explained by the independent variables. The model predicts no better than always guessing the mean of the dependent variable, so it captures none of the data's variation and is of no use for prediction.

R-squared = 1: An R-squared value of 1 signifies a perfect fit. The regression model explains all of the variance in the dependent variable, and every data point falls exactly on the regression line. With real, noisy data this rarely occurs, and when it does it is often a sign of overfitting, where the model is too complex for the data.

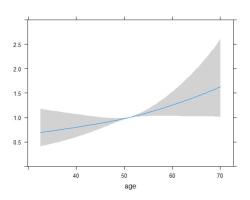

R-squared between 0 and 1: This is the typical scenario. The closer the R-squared value is to 1, the more of the variance in the dependent variable the model explains. For example, an R-squared of 0.80 means that the model explains 80% of the variance in the dependent variable, while the remaining 20% is left unexplained (noise, or factors not included in the model). What counts as a good value depends on the context of the analysis. A high R-squared also does not by itself mean that the model is the best choice for the data: in ordinary least squares, adding predictors never lowers R-squared on the data the model was fit to, so model complexity must be considered as well.
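The three cases above can be reproduced with a few lines of Python. This is a minimal sketch, assuming NumPy is available; the helper name `r_squared` and the five data points are made up for illustration and do not come from any particular dataset.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # unexplained variation
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total variation around the mean
    return 1.0 - ss_res / ss_tot

# Hypothetical toy data (illustrative only)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.0, 5.0, 4.0, 5.0])

# Case 1: always predict the mean of y, so nothing is explained
r2_mean = r_squared(y, np.full_like(y, y.mean()))

# Case 2: predictions equal the observations, so everything is explained
r2_perfect = r_squared(y, y)

# Case 3: ordinary least-squares line, which falls somewhere in between
slope, intercept = np.polyfit(x, y, 1)
r2_line = r_squared(y, slope * x + intercept)

print(r2_mean, r2_perfect, round(r2_line, 3))  # 0.0 1.0 0.6
```

For this toy data the fitted line explains 60% of the variance in `y`; the other 40% is the scatter of the points around the line.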

R-squared should be interpreted alongside other statistical metrics and with the context and purpose of the analysis in mind. A high R-squared does not guarantee that the model is a good fit for all scenarios, and a low R-squared does not necessarily mean the model is useless. It is one tool among many for assessing the performance of a regression model.

Coefficient of Determination: Understanding Its Meaning

The coefficient of determination, denoted by R², quantifies the proportion of the variance in a dependent variable that can be explained by an independent variable or set of independent variables within a regression model. It measures how well the independent variables account for the fluctuations observed in the dependent variable.
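Written as a formula, the usual definition is as follows. The symbols are notation introduced here for illustration, not taken from the text above: $y_i$ are the observed values, $\hat{y}_i$ the model's predictions, $\bar{y}$ the mean of the observations, and $n$ the number of observations.

```latex
R^2 = 1 - \frac{SS_{\mathrm{res}}}{SS_{\mathrm{tot}}},
\qquad
SS_{\mathrm{res}} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2,
\qquad
SS_{\mathrm{tot}} = \sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2
```

Here $SS_{\mathrm{res}}$ is the variation the model leaves unexplained and $SS_{\mathrm{tot}}$ is the total variation around the mean, so the ratio is the unexplained share and R² is the explained share.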

Interpreting the Coefficient of Determination in Statistics

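The coefficient of determination usually lies between 0 and 1 and summarizes how much of the variation in the dependent variable the independent variables account for. That range holds for a least-squares model with an intercept, evaluated on the data it was fit to; on new data, or for a model without an intercept, R² can be negative, meaning the model predicts worse than simply using the mean.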

R² = 0: Indicates that the model explains none of the variation, meaning the independent variables have no linear predictive power for the dependent variable.

0 < R² < 1: Indicates that the independent variables explain some portion of the variation in the dependent variable. A higher R² value means a larger explained share. R² says nothing about the direction of the relationship: a strongly negative relationship produces a high R² just as a strongly positive one does.

R² = 1: Represents a perfect fit, signifying that the independent variables explain all of the variation in the dependent variable.
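In simple linear regression with an intercept, R² equals the square of the Pearson correlation coefficient r, so the sign of the relationship is not visible in R². The sketch below illustrates this with a decreasing relationship. It assumes NumPy, and the data values are invented for the example.

```python
import numpy as np

# Hypothetical data in which y falls as x rises (illustrative only)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([9.8, 8.1, 6.2, 3.9, 2.0])

# Pearson correlation: negative for a decreasing relationship
r = np.corrcoef(x, y)[0, 1]

# Least-squares line and its R² computed from the residuals
slope, intercept = np.polyfit(x, y, 1)
y_hat = slope * x + intercept
r2 = 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

print(f"r = {r:.4f}, r squared = {r**2:.4f}, R² = {r2:.4f}")
```

The correlation is negative, yet R² is close to 1, and it matches r squared up to floating-point error.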

Deciphering the Significance of the Coefficient of Determination

The coefficient of determination is widely used to evaluate how well a regression model fits. A higher R² value means the independent variables explain more of the variation in the dependent variable, but it does not show that the model is correctly specified or that it will predict new data well. It is essential to consider other factors alongside R², such as the statistical significance of the independent variables, the number of predictors, and the presence of outliers, to make a comprehensive assessment of the model's performance.

Applications of the Coefficient of Determination

The coefficient of determination finds widespread applications in various statistical domains, including:

Model Evaluation: Assessing the goodness of fit of regression models by quantifying the proportion of variance explained by the independent variables.

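Model Comparison: Comparing the relative performance of different regression models fit to the same dependent variable by evaluating their respective R² values. Because R² never decreases when predictors are added, models with different numbers of predictors are better compared using adjusted R² or performance on held-out data.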

Relationship Strength Assessment: Gauging the strength of the association between variables by interpreting the R² value, providing insights into the explanatory power of the independent variables.

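Variable Importance Identification: Gauging how much each independent variable contributes by examining the change in R² when it is added to or removed from the model. When the independent variables are correlated with one another, this contribution depends on which other variables are already in the model, so it should be read with caution.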

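Prediction: Judging how much to rely on predictions of the dependent variable made by the regression model. The predictions come from the model itself; R² indicates how much of the variation they can be expected to capture, at least on data similar to that used for fitting.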
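Because R² never decreases when predictors are added to a least-squares model, comparisons between models with different numbers of predictors are often made with adjusted R², which penalizes extra predictors. The following is a minimal sketch, assuming NumPy; the helper names `fit_r2` and `adjusted_r2` and the simulated data are hypothetical and chosen only for illustration.

```python
import numpy as np

def fit_r2(X, y):
    """Fit ordinary least squares with an intercept; return R² on the fitting data."""
    A = np.column_stack([np.ones(len(y)), X])     # prepend an intercept column
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)  # least-squares coefficients
    resid = y - A @ coef
    return 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)

def adjusted_r2(r2, n, p):
    """Adjusted R² for n observations and p predictors (intercept not counted)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

# Simulated data: y depends on x1 only; noise_col is unrelated by construction
rng = np.random.default_rng(0)
n = 30
x1 = rng.normal(size=n)
noise_col = rng.normal(size=n)
y = 2.0 * x1 + rng.normal(size=n)

r2_small = fit_r2(x1.reshape(-1, 1), y)               # one predictor
r2_big = fit_r2(np.column_stack([x1, noise_col]), y)  # plus an irrelevant one

print("R²:         ", r2_small, r2_big)  # the second is never smaller
print("adjusted R²:", adjusted_r2(r2_small, n, 1), adjusted_r2(r2_big, n, 2))
```

Plain R² for the larger model is at least as high as for the smaller one even though the added column carries no information about `y`. Adjusted R² applies a penalty that grows with the number of predictors, which makes it a fairer basis for this kind of comparison.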

In conclusion, the coefficient of determination is a valuable tool for understanding how much of the variation in a dependent variable a regression model explains, provided it is read together with other diagnostics rather than on its own.