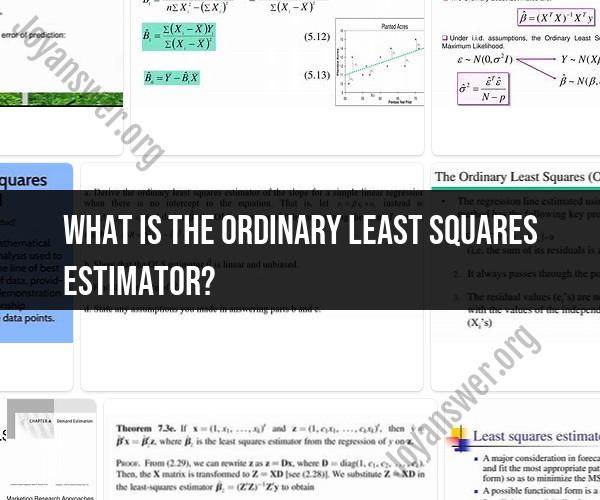

What is the ordinary least squares estimator?

The Ordinary Least Squares (OLS) estimator is a statistical technique used in regression analysis to estimate the parameters of a linear regression model. It is a method for finding the best-fitting linear relationship between a dependent variable and one or more independent variables. Here's how it works:

Linear Regression Model: In linear regression, you have a dependent variable (the one you're trying to predict) and one or more independent variables (predictors). The relationship between them is assumed to be linear, meaning you're trying to find a linear equation of the form:

Y = β₀ + β₁X₁ + β₂X₂ + ... + βₖXₖ + ε

- Y is the dependent variable.

- X₁, X₂, ..., Xₖ are the independent variables.

- β₀, β₁, β₂, ..., βₖ are the parameters you want to estimate.

- ε is the error term, which captures the variation in Y that the linear combination of the independent variables does not explain.

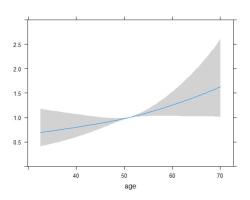

Objective: The goal of OLS is to find the values of β₀, β₁, β₂, ..., βₖ that minimize the sum of the squared differences between the observed values of the dependent variable and the values predicted by the linear equation.
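To make the objective concrete, here is a minimal sketch of the quantity OLS minimizes, written with NumPy. The function name `sum_squared_residuals` and the toy data are made up for illustration; the design matrix `X` is assumed to carry a leading column of ones so that the first coefficient plays the role of β₀.

```python
import numpy as np

def sum_squared_residuals(beta, X, y):
    """Return the OLS objective: the sum of squared gaps between y and X @ beta."""
    residuals = y - X @ beta
    return float(residuals @ residuals)

# Hypothetical toy data: one predictor plus a column of ones for the intercept.
x1 = np.array([1.0, 2.0, 3.0, 4.0])
X = np.column_stack([np.ones_like(x1), x1])
y = np.array([3.0, 5.0, 7.0, 9.0])  # generated exactly as y = 1 + 2 * x1

# Candidate coefficients that match the data perfectly give an objective of zero.
ssr_exact = sum_squared_residuals(np.array([1.0, 2.0]), X, y)

# Shifting the intercept by 1 leaves a residual of 1 on each of the 4 points.
ssr_off = sum_squared_residuals(np.array([0.0, 2.0]), X, y)

print(ssr_exact, ssr_off)
```

Any candidate set of coefficients can be scored this way; OLS picks the one with the smallest score.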

Minimization: OLS finds the best-fitting line by minimizing the sum of the squared residuals. The residuals are the differences between the observed Y values and the values predicted by the fitted equation; they are sample estimates of the unobserved ε terms, not the ε terms themselves. Squaring keeps positive and negative differences from canceling each other out, and it penalizes large misses more heavily than small ones.

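Least Squares Solution: The OLS estimator is the set of coefficient values that minimizes the sum of the squared residuals. In matrix form, with X holding a column of ones plus one column per independent variable and y holding the observed values, the vector of estimates equals (XᵀX)⁻¹Xᵀy, provided the columns of X are not perfectly collinear. These values give the equation of the linear model that best fits the data in the least-squares sense.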
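As a rough illustration of computing the least squares solution, the sketch below fits simulated data two ways with NumPy: by solving the normal equations (XᵀX)b = Xᵀy directly, and with `np.linalg.lstsq`, which is usually the more numerically stable choice. The data, the seed, and the "true" coefficients in `true_beta` are all invented for the example.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200

# Hypothetical data: two predictors and made-up true coefficients (β₀, β₁, β₂).
predictors = rng.normal(size=(n, 2))
true_beta = np.array([1.5, -2.0, 0.5])

# Prepend a column of ones so the first coefficient acts as the intercept.
X = np.column_stack([np.ones(n), predictors])
y = X @ true_beta + rng.normal(scale=0.1, size=n)

# Route 1: solve the normal equations (X^T X) b = X^T y.
beta_normal = np.linalg.solve(X.T @ X, X.T @ y)

# Route 2: let NumPy's least squares solver do the work.
beta_lstsq, *_ = np.linalg.lstsq(X, y, rcond=None)

# At the minimum, the residuals are orthogonal to every column of X.
residuals = y - X @ beta_lstsq
gradient_check = X.T @ residuals

print(beta_normal)
print(beta_lstsq)
```

Both routes should agree to within floating-point error, and with this little noise the estimates should land close to the made-up true values.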

Interpretation: Once the OLS estimates are obtained, you can interpret the coefficients (β₀, β₁, β₂, ...) to understand how changes in the independent variables are associated with changes in the dependent variable.
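A small sketch may help with reading a coefficient. The estimates in `beta_hat` are hypothetical numbers, not the output of any real fit; the point is only that raising X₁ by one unit while holding X₂ fixed moves the prediction by exactly the X₁ coefficient.

```python
import numpy as np

# Hypothetical fitted coefficients: intercept, slope on X1, slope on X2.
beta_hat = np.array([2.0, 0.5, -1.0])

def predict(x1, x2):
    """Predicted Y from the fitted linear equation."""
    return beta_hat[0] + beta_hat[1] * x1 + beta_hat[2] * x2

baseline = predict(10.0, 3.0)
bumped = predict(11.0, 3.0)   # X1 raised by one unit, X2 unchanged

# The change in the prediction equals the coefficient on X1.
effect_x1 = bumped - baseline
print(effect_x1)
```

This is a statement about the fitted model's predictions. Whether it also describes a causal effect depends on how the data were collected, not on the arithmetic.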

OLS is widely used in various fields for tasks such as predictive modeling, hypothesis testing, and understanding relationships between variables. It's important to note that OLS makes certain assumptions about the data, including linearity, independence of errors, and homoscedasticity (constant variance of the errors). Violations of these assumptions can affect the reliability of OLS results: a misspecified, nonlinear relationship can bias the coefficient estimates, while correlated errors or non-constant variance make the usual standard errors and hypothesis tests untrustworthy.
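One informal way to look for non-constant variance is to fit the model, sort the residuals by fitted value, and compare their spread in the lower and upper halves. The sketch below does that on simulated data. The helper `variance_ratio`, the data, and the seed are illustrative assumptions, and this is a crude heuristic rather than a formal test such as Breusch-Pagan.

```python
import numpy as np

def variance_ratio(x, y):
    """Fit y on x by OLS, then return var(residuals, upper half) / var(residuals, lower half),
    where the halves are defined by the fitted values."""
    X = np.column_stack([np.ones_like(x), x])
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    fitted = X @ beta_hat
    residuals = y - fitted
    order = np.argsort(fitted)
    half = len(order) // 2
    low = residuals[order[:half]]
    high = residuals[order[half:]]
    return float(np.var(high) / np.var(low))

rng = np.random.default_rng(0)
x = rng.uniform(1.0, 10.0, size=500)

# Simulated case 1: error spread is the same everywhere (homoscedastic).
y_const = 1.0 + 2.0 * x + rng.normal(scale=1.0, size=500)

# Simulated case 2: error spread grows with x (heteroscedastic).
y_grow = 1.0 + 2.0 * x + rng.normal(size=500) * x

ratio_const = variance_ratio(x, y_const)
ratio_grow = variance_ratio(x, y_grow)
print(ratio_const, ratio_grow)
```

A ratio near 1 is what constant variance would tend to produce; a ratio well above 1 suggests the errors fan out as the fitted values grow. Plotting residuals against fitted values is the more common first check.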