What are the assumptions of logistic regression?

Logistic regression is a statistical method used for modeling the relationship between a binary dependent variable (usually coded as 0 or 1) and one or more independent variables (predictors or features). Like many statistical techniques, logistic regression relies on several key assumptions. These assumptions are important to ensure the validity and reliability of the model's results. Here are the main assumptions of logistic regression:

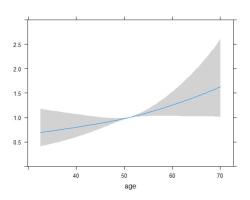

Linearity of the Logit: This assumption implies that the relationship between the log-odds of the dependent variable and the independent variables is linear. In other words, the log-odds should change in a constant and consistent manner with changes in the predictor variables. This can be checked by examining scatterplots and assessing the linearity of the log-odds.

Independence of Errors: Logistic regression assumes that the observations in your dataset are independent of each other. In other words, the value of the dependent variable for one observation should not be influenced by or correlated with the values of the dependent variable for other observations. Violations of this assumption can occur in data with temporal or spatial dependencies.

No Multicollinearity: Multicollinearity occurs when two or more independent variables in the model are highly correlated with each other. This can make it difficult to discern the individual effects of these variables on the dependent variable. It's important to check for multicollinearity and, if present, consider removing or transforming variables to mitigate its impact.

Large Sample Size: Logistic regression typically assumes a relatively large sample size to ensure that the maximum likelihood estimates of the model's parameters are stable and reliable. A rule of thumb is to have at least 10-15 cases with the least frequent outcome (e.g., cases with the outcome coded as 1) per predictor variable to obtain robust results.

No Outliers: Outliers are extreme data points that can disproportionately influence the model's coefficients. It's important to identify and handle outliers appropriately, as they can distort the parameter estimates and affect the model's performance.

Binary Dependent Variable: Logistic regression is specifically designed for modeling binary outcomes (e.g., yes/no, success/failure). While it can be extended to handle multi-class classification problems through techniques like multinomial logistic regression or one-vs-all strategies, the basic logistic regression assumes a binary outcome.

Absence of Perfect Separation: Perfect separation occurs when there is a combination of predictor variables that can perfectly predict the outcome variable for all cases in the dataset. In such cases, logistic regression may fail to converge or produce unreliable parameter estimates. Identifying and addressing perfect separation issues is crucial.

Adequate Variation in the Dependent Variable: There should be some variation in the dependent variable; otherwise, the logistic regression model may not provide meaningful insights or predictions.

It's essential to assess these assumptions when applying logistic regression to a particular dataset. Violations of these assumptions may require data preprocessing, transformation, or the use of alternative modeling techniques to obtain accurate and reliable results. Diagnostic tools like residual plots, goodness-of-fit tests, and cross-validation can help in evaluating the model's performance and checking the validity of these assumptions.

Assumptions Underlying Logistic Regression: A Deep Dive

Logistic regression is a statistical model that is used to predict the probability of a binary outcome. It is a popular model for many machine learning tasks, such as predicting whether a customer will churn, whether a patient has a disease, or whether a loan will be defaulted on.

Logistic regression is based on a number of assumptions, which must be met in order for the model to be reliable. These assumptions are:

- Binary outcome variable: The dependent variable in logistic regression must be binary, meaning that it can only take on two values (e.g., yes/no, true/false, 1/0).

- Linearity in the logit: The logit of the dependent variable must be linear in the independent variables. The logit is the natural logarithm of the odds of the outcome, and it is calculated as follows:

Logit(p) = ln(p / (1 - p))

where p is the probability of the outcome.

- Independence of observations: The observations in the dataset must be independent of each other. This means that the outcome for one observation should not be influenced by the outcomes of the other observations.

- No multicollinearity: The independent variables in the dataset should not be highly correlated with each other. Multicollinearity can cause the model to be unstable and produce inaccurate results.

- Sufficiently large sample size: Logistic regression models require a sufficiently large sample size in order to be reliable. A general guideline is that you need at least 10 cases with the least frequent outcome for each independent variable in your model.

Checking the Foundations: Understanding and Testing Logistic Regression Assumptions

There are a number of ways to check whether your data meets the assumptions of logistic regression. Some of the most common methods include:

- Binary outcome variable: This can be checked by simply looking at the distribution of the dependent variable. If there are more than two unique values in the variable, then it is not binary.

- Linearity in the logit: This can be checked by plotting the logit of the dependent variable against the independent variables. If the relationship is linear, then the assumption is met.

- Independence of observations: This can be checked by looking at the design of the study. If the observations were collected in a random and independent manner, then the assumption is likely to be met.

- No multicollinearity: This can be checked by calculating the correlation coefficients between the independent variables. If any of the correlation coefficients are greater than 0.7, then there is likely to be multicollinearity.

- Sufficiently large sample size: This can be checked by calculating the number of cases with the least frequent outcome for each independent variable in the model. If there are fewer than 10 cases for any of the independent variables, then the sample size is likely to be too small.

Logistic Regression Assumptions and Their Impact on Model Reliability

If the assumptions of logistic regression are not met, then the model may not be reliable. For example, if the outcome variable is not binary, then the model will not be able to accurately predict the probability of the outcome. Similarly, if the observations are not independent, then the model may overfit the training data and not generalize well to new data.

It is important to check the assumptions of logistic regression before using the model. If any of the assumptions are not met, then you may need to take steps to address the issue. For example, if there is multicollinearity, you may need to remove some of the independent variables from the model. If the sample size is too small, you may need to collect more data.

By checking and addressing the assumptions of logistic regression, you can ensure that your model is reliable and produces accurate predictions.