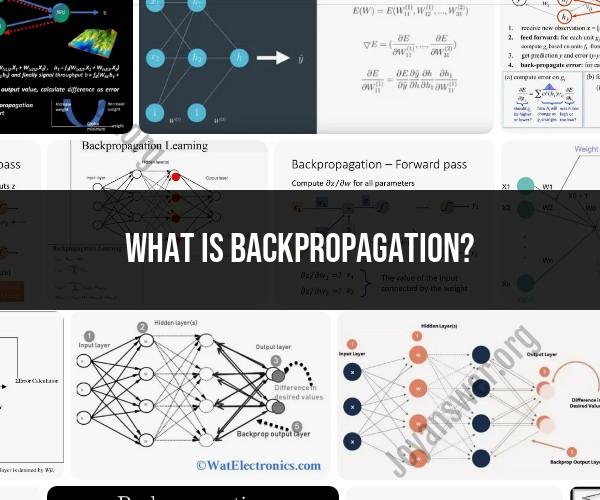

What is backpropagation?

Backpropagation, short for "backward propagation of errors," is a fundamental algorithm used in training artificial neural networks, particularly in supervised learning. It is a core part of training deep learning models such as multi-layer perceptrons and other deep neural networks. Strictly speaking, backpropagation computes the gradient of the loss with respect to each of the model's parameters (weights and biases). An optimization algorithm such as gradient descent then uses those gradients to adjust the parameters so that the network learns to make accurate predictions or classifications.

Here is a step-by-step overview of the backpropagation process:

1. Forward Pass:

- The process begins with the forward pass. Input data is fed into the neural network and computations are performed layer by layer: each layer applies its weights and biases, then an activation function, and the last layer produces the output. The intermediate results of each layer are kept, because the backward pass needs them.

2. Calculate Error:

- After the forward pass, the predicted output is compared to the actual target values (ground truth). The error or loss is calculated, which quantifies how far off the predictions are from the desired outcomes. Common loss functions include mean squared error for regression tasks and cross-entropy for classification tasks.

3. Backward Pass (Backpropagation):

- Training aims to minimize the error or loss. To do this, backpropagation calculates the gradients of the loss with respect to the model's parameters (weights and biases) by propagating the error backward through the network, from the output layer toward the input layer.

4. Chain Rule of Calculus:

- The chain rule of calculus is what makes the gradient computation efficient. Gradients are calculated by applying the chain rule in reverse order, starting at the output layer and moving backward through the hidden layers. Each layer reuses the gradient already computed for the layer after it, so a single backward sweep yields the gradients for every parameter. In this way the chain rule tells us how a change in each parameter affects the overall error.

5. Weight and Bias Updates:

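- Once the gradients are calculated, the model's parameters are updated using an optimization algorithm such as stochastic gradient descent (SGD), Adam, or RMSprop. In plain SGD, each parameter takes a small step, scaled by the learning rate, in the direction opposite to its gradient; Adam and RMSprop additionally adapt the step size for each parameter. These updates reduce the error, helping the network learn and improve its predictions.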

6. Iteration:

- Steps 1 to 5 are repeated iteratively for a specified number of epochs or until convergence. Each iteration helps the neural network learn and refine its parameters, bringing the error closer to a minimum value.
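The six steps above can be sketched end to end in plain Python. This is a minimal illustration under assumptions that are not in the text: a network with one input, a hidden layer of four sigmoid units (`n_hidden`), a single linear output, a loss of half the mean squared error, and full-batch gradient descent with a fixed learning rate `lr`. All function names (`init_params`, `forward`, `backward`, `train`, `mse`) are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_hidden, seed=0):
    # Small random weights: 1 input -> n_hidden sigmoid units -> 1 linear output
    rng = random.Random(seed)
    return {
        "W1": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    # Step 1: forward pass, keeping the hidden activations for the backward pass
    h = [sigmoid(w * x + b) for w, b in zip(p["W1"], p["b1"])]
    y_hat = sum(w * a for w, a in zip(p["W2"], h)) + p["b2"]
    return h, y_hat

def mse(p, data):
    # Step 2: loss L = mean over examples of 0.5 * (y_hat - y)^2
    return sum(0.5 * (forward(p, x)[1] - y) ** 2 for x, y in data) / len(data)

def backward(p, x, y, h, y_hat, n):
    # Steps 3-4: chain rule from the output back to every parameter
    d_yhat = (y_hat - y) / n                           # dL/dy_hat
    d_h = [d_yhat * w for w in p["W2"]]                # dL/dh_j
    d_z = [dh * a * (1 - a) for dh, a in zip(d_h, h)]  # sigmoid'(z) = a * (1 - a)
    return {
        "W2": [d_yhat * a for a in h],
        "b2": d_yhat,
        "W1": [dz * x for dz in d_z],
        "b1": d_z,
    }

def train(data, n_hidden=4, lr=0.3, epochs=1000, seed=0):
    p = init_params(n_hidden, seed)
    for _ in range(epochs):                            # Step 6: iterate
        g_sum = {"W1": [0.0] * n_hidden, "b1": [0.0] * n_hidden,
                 "W2": [0.0] * n_hidden, "b2": 0.0}
        for x, y in data:                              # accumulate gradients over the batch
            h, y_hat = forward(p, x)
            g = backward(p, x, y, h, y_hat, len(data))
            for k in ("W1", "b1", "W2"):
                g_sum[k] = [s + gi for s, gi in zip(g_sum[k], g[k])]
            g_sum["b2"] += g["b2"]
        # Step 5: plain gradient-descent update, parameter <- parameter - lr * gradient
        for k in ("W1", "b1", "W2"):
            p[k] = [w - lr * gw for w, gw in zip(p[k], g_sum[k])]
        p["b2"] -= lr * g_sum["b2"]
    return p

# Toy regression: fit y = x^2 at five points in [-1, 1]
data = [(x / 2, (x / 2) ** 2) for x in range(-2, 3)]
print("loss before:", mse(init_params(4), data))
print("loss after: ", mse(train(data), data))
```

Frameworks express these loops as matrix operations over whole layers; the element-by-element lists here are only for readability.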

Backpropagation is a foundational concept in deep learning, enabling neural networks to learn complex patterns and representations in data. By iteratively adjusting the model's parameters to minimize the error, the network becomes capable of making accurate predictions for a wide range of tasks, including image recognition, natural language processing, and more.

While backpropagation is a powerful training technique, modern deep learning libraries and frameworks compute the gradients automatically through automatic differentiation, so developers and researchers rarely need to implement the algorithm from scratch.
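To give a sense of what such frameworks do internally, here is a heavily simplified sketch of reverse-mode automatic differentiation on scalars, the general technique behind framework backpropagation. The `Value` class and its methods are hypothetical and do not mirror any particular library's API; real frameworks work on tensors and support far more operations. Each operation records its inputs and a local derivative rule, and `backward()` replays the recorded graph in reverse, applying the chain rule.

```python
import math

class Value:
    """Hypothetical scalar node that records how it was computed."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad into the parents' grads

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def fn():
            self.grad += out.grad   # d(a + b)/da = 1
            other.grad += out.grad  # d(a + b)/db = 1
        out._backward_fn = fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def fn():
            self.grad += other.data * out.grad  # d(a * b)/da = b
            other.grad += self.data * out.grad  # d(a * b)/db = a
        out._backward_fn = fn
        return out

    def sigmoid(self):
        s = 1.0 / (1.0 + math.exp(-self.data))
        out = Value(s, (self,))
        def fn():
            self.grad += s * (1 - s) * out.grad  # sigmoid'(z) = s * (1 - s)
        out._backward_fn = fn
        return out

    def backward(self):
        # Topologically order the graph, then apply the chain rule output -> inputs
        order, seen = [], set()
        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for parent in v._parents:
                    visit(parent)
                order.append(v)
        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            if v._backward_fn is not None:
                v._backward_fn()

# One sigmoid neuron: y = sigmoid(w * x + b)
w, x, b = Value(0.5), Value(2.0), Value(-1.0)
y = (w * x + b).sigmoid()
y.backward()
print(y.data, w.grad, x.grad, b.grad)  # prints 0.5 0.5 0.125 0.25
```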

Backpropagation in Neural Networks: Explanation and Process

Backpropagation is an algorithm used to train neural networks, most commonly in supervised learning. It calculates the error of the network's output relative to the desired output, then propagates that error backward through the network to determine how much each connection weight (and bias) contributed to it; the weights are then adjusted accordingly.

The backpropagation process can be broken down into the following steps:

- Forward pass: The input data is fed into the neural network, and the output of each neuron is calculated.

- Error calculation: The error of the neural network's output is calculated relative to the desired output.

- Backward pass: The error is propagated back through the network to compute a gradient for each weight, and the weights of the connections between the neurons are adjusted using those gradients.

These steps are repeated over the training data, typically for a fixed number of epochs or until the error stops decreasing, so that the network's outputs move closer to the desired outputs.
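The three steps and the repeat-until-done loop can be illustrated with a single sigmoid neuron learning logical AND. Several choices here are assumptions rather than details from the text: binary cross-entropy as the error measure, a fixed learning rate `lr`, and a stopping rule that ends training once the average loss falls below `tol` or after `max_epochs`. `train_neuron` is a hypothetical helper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Logical AND: a small, linearly separable classification task
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]

def train_neuron(data, lr=1.0, tol=0.05, max_epochs=10000):
    w, b = [0.0, 0.0], 0.0
    for epoch in range(1, max_epochs + 1):
        gw, gb, loss = [0.0, 0.0], 0.0, 0.0
        for x, y in data:
            # Forward pass
            a = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            # Error calculation: average binary cross-entropy
            loss -= (y * math.log(a) + (1 - y) * math.log(1 - a)) / len(data)
            # Backward pass: for sigmoid + cross-entropy, dL/dz simplifies to a - y
            dz = (a - y) / len(data)
            gw = [gw[0] + dz * x[0], gw[1] + dz * x[1]]
            gb += dz
        # Adjust the weights and bias against the gradient
        w = [w[0] - lr * gw[0], w[1] - lr * gw[1]]
        b -= lr * gb
        if loss < tol:  # stop once outputs are close enough to the targets
            break
    return w, b, epoch

w, b, epochs_used = train_neuron(DATA)
preds = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in DATA]
print(preds, "after", epochs_used, "epochs")
```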

The Role of Backpropagation in Training Neural Networks

Backpropagation plays a vital role in training neural networks. It lets a network use the errors it makes on training examples to correct its parameters, so its performance improves over time.

Without an efficient way to compute gradients such as backpropagation, training networks large enough for complex tasks like image recognition and natural language processing would be impractical.

Mathematics and Algorithms Behind Backpropagation

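The mathematics behind backpropagation is based on the chain rule of calculus, which expresses the derivative of a composite function as the product of the derivatives of its parts. A neural network is such a composite function: each layer is applied to the output of the previous one.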
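Written out, the chain rule and one application of it look like this. The single neuron used for the application (input $x$, weight $w$, bias $b$, sigmoid activation $\sigma$, target $y$, half squared-error loss $L$) is an illustrative assumption, not a setup taken from the text:

$$
\frac{d}{dx}\, f\bigl(g(x)\bigr) = f'\bigl(g(x)\bigr)\, g'(x)
$$

$$
z = wx + b, \qquad a = \sigma(z), \qquad L = \tfrac{1}{2}(a - y)^2
$$

$$
\frac{\partial L}{\partial w}
= \frac{\partial L}{\partial a}\,\frac{\partial a}{\partial z}\,\frac{\partial z}{\partial w}
= (a - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x
$$

The factor $\partial L / \partial a \cdot \partial a / \partial z$ is the part that backpropagation passes backward to earlier layers; only the last factor, $\partial z / \partial w = x$, is specific to this weight.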

The backpropagation algorithm uses the chain rule to calculate the derivative of the loss (the error of the network's output) with respect to each weight of the connections between the neurons. Once the derivatives are calculated, the weights are updated using a gradient descent algorithm.
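A common way to confirm that a hand-derived chain-rule gradient is correct is to compare it with a finite-difference estimate. This sketch assumes a single sigmoid neuron with z = wx + b, a = sigmoid(z), and loss L = 0.5 * (a - y)^2; the function names and the input values are arbitrary choices for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    # Forward pass of one sigmoid neuron followed by half squared error
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def grad_w_chain_rule(w, b, x, y):
    # dL/dw = dL/da * da/dz * dz/dw = (a - y) * a * (1 - a) * x
    a = sigmoid(w * x + b)
    return (a - y) * a * (1 - a) * x

def grad_w_numeric(w, b, x, y, eps=1e-6):
    # Central finite difference, used only as a check on the analytic gradient
    return (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)

w, b, x, y = 0.8, -0.3, 1.5, 1.0
print(grad_w_chain_rule(w, b, x, y), grad_w_numeric(w, b, x, y))
```

The two numbers should agree to many decimal places; a large mismatch usually points to a mistake in one of the chain-rule factors.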

Gradient descent iteratively moves the weights a small step in the direction of the negative gradient, which is the direction in which the loss function decreases most steeply. The loss function measures the error of the neural network's output.
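A minimal sketch of the update rule w <- w - lr * dL/dw, applied to a toy one-parameter loss L(w) = (w - 3)^2 whose minimum is known to be at w = 3. The learning rate, step count, and toy loss are assumptions chosen for illustration, and `gradient_descent` is a hypothetical helper. In a neural network, w would stand for every weight, and the gradient would come from backpropagation.

```python
def gradient_descent(grad_fn, w0, lr=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - lr * dL/dw."""
    w = w0
    for _ in range(steps):
        w = w - lr * grad_fn(w)
    return w

# Toy loss L(w) = (w - 3)^2, derivative dL/dw = 2 * (w - 3)
w_star = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(w_star)  # very close to 3
```

With lr = 0.1, each step shrinks the distance to the minimum by a factor of 0.8; a learning rate above 1.0 would make this particular loss diverge.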

Applications and Advantages of Backpropagation

Backpropagation is used in a wide variety of applications, including:

- Image recognition

- Natural language processing

- Machine translation

- Speech recognition

- Medical diagnosis

- Financial forecasting

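Neural networks trained with backpropagation have several advantages:

- They can learn complex, nonlinear relationships in data.

- They can generalize to new, unseen data when they are trained and regularized appropriately.

- They learn from their mistakes: every training step uses the current error to correct the parameters.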

Improving Neural Network Performance with Backpropagation

There are a number of things that can be done to improve the performance of neural networks trained using backpropagation:

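- Use a good initialization scheme, such as Xavier (Glorot) or He initialization, so that activations and gradients neither vanish nor explode early in training.

- Combine backpropagation with regularization (for example, L2 weight decay or dropout) and with stochastic gradient descent, which updates the parameters using small random batches of training data.

- Use early stopping: halt training when performance on a held-out validation set stops improving, to prevent the neural network from overfitting the training data.

- Use techniques that help the optimizer avoid getting stuck in poor local minima, such as momentum and simulated annealing.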
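As an illustration of momentum, this sketch compares plain gradient descent with classical (heavy-ball) momentum on a toy, elongated quadratic loss L(w) = 0.5 * (w1^2 + 25 * w2^2). The loss, the learning rate `lr`, the momentum coefficient `beta`, and the step count are assumptions; in a real network the gradients would come from backpropagation rather than from the hand-written `grad`.

```python
def loss(w):
    # Toy elongated bowl: shallow along w[0], steep along w[1]
    return 0.5 * (w[0] ** 2 + 25.0 * w[1] ** 2)

def grad(w):
    # Exact gradient of the toy loss above
    return [w[0], 25.0 * w[1]]

def gd(w, lr, steps):
    # Plain gradient descent: w <- w - lr * grad
    for _ in range(steps):
        g = grad(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

def gd_momentum(w, lr, beta, steps):
    # Classical momentum: v <- beta * v - lr * grad, then w <- w + v
    v = [0.0] * len(w)
    for _ in range(steps):
        g = grad(w)
        v = [beta * vi - lr * gi for vi, gi in zip(v, g)]
        w = [wi + vi for wi, vi in zip(w, v)]
    return w

start = [1.0, 1.0]
print("plain GD loss:", loss(gd(start, lr=0.03, steps=200)))
print("momentum loss:", loss(gd_momentum(start, lr=0.03, beta=0.9, steps=200)))
```

With these settings momentum ends at a much lower loss after the same 200 steps: the velocity term keeps accumulating progress along the shallow w1 direction, where plain gradient descent shrinks the error by only 3% per step.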

Conclusion

Backpropagation is a powerful algorithm for training neural networks. It underpins a wide variety of applications, from image recognition to medical diagnosis, and lets networks learn complex patterns from data.

By paying attention to the factors that affect training, such as initialization, regularization, early stopping, and the choice of optimizer, you can improve the performance of your neural networks.