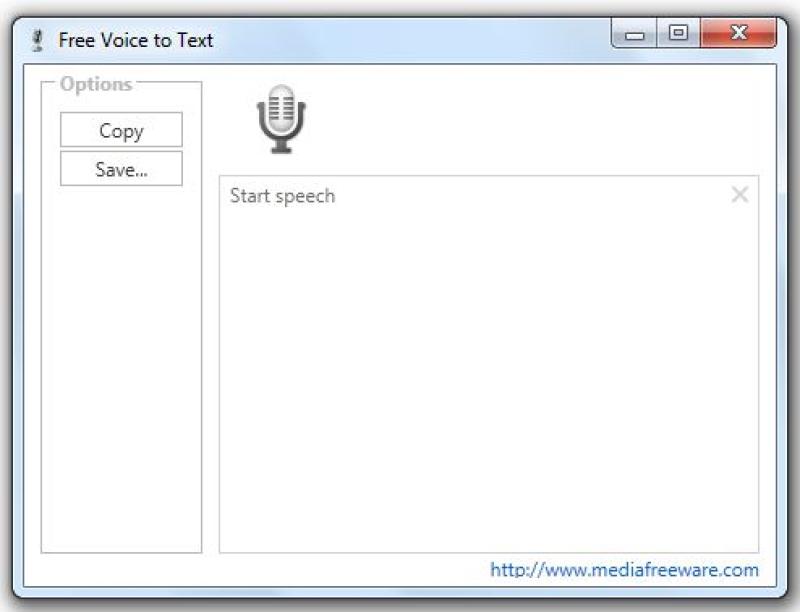

How can I write a voice to text converter?

Creating a voice-to-text converter involves several steps and technologies. Here’s a high-level guide to get you started:

1. Speech Recognition:

- Choose a Speech Recognition Engine/API: Google Cloud Speech-to-Text and IBM Watson Speech to Text are hosted APIs, while Mozilla DeepSpeech is an open-source engine that you run yourself. Hosted APIs are ready to use; open-source engines require you to set up, host, and possibly train the model.

- Setup and Authentication: Register for an API key or account, follow their documentation for authentication, and get familiar with their usage policies.

2. Input Processing:

- Audio Input: Capture audio using a microphone or accept audio files in different formats (e.g., WAV or MP3).

- Preprocessing: Clean up the audio, remove noise, and standardize the format (sample rate, channel count, encoding) to match what the recognizer expects. You might use libraries like PyDub (for Python) for this.
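As a rough illustration of format standardization, the sketch below inspects a WAV file and downmixes 16-bit stereo to mono. It uses only Python's standard `wave` and `struct` modules rather than PyDub so that it runs without extra packages. The function names `describe_wav` and `stereo_to_mono` are made up for this example, and whether your recognizer needs mono input is an assumption to check against its documentation.

```python
import struct
import wave


def describe_wav(source):
    """Return (channels, sample_width_bytes, sample_rate, n_frames) of a WAV file."""
    with wave.open(source, "rb") as wav:
        return (wav.getnchannels(), wav.getsampwidth(),
                wav.getframerate(), wav.getnframes())


def stereo_to_mono(source, target):
    """Average the two channels of a 16-bit stereo WAV and write a mono WAV."""
    with wave.open(source, "rb") as wav:
        if wav.getnchannels() != 2 or wav.getsampwidth() != 2:
            raise ValueError("expected 16-bit stereo input")
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    # 16-bit WAV samples are little-endian signed integers,
    # interleaved as left, right, left, right, ...
    samples = struct.unpack("<%dh" % (len(raw) // 2), raw)
    mono = [(samples[i] + samples[i + 1]) // 2
            for i in range(0, len(samples), 2)]

    with wave.open(target, "wb") as out:
        out.setnchannels(1)
        out.setsampwidth(2)
        out.setframerate(rate)  # the sample rate is left unchanged
        out.writeframes(struct.pack("<%dh" % len(mono), *mono))
```

Both functions accept a file path or an open binary file object. Noise removal and resampling are not covered here; those are better left to a dedicated audio library.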

3. Integration and Coding:

- Code Integration: Utilize the chosen API/library in your preferred programming language (Python, JavaScript, etc.). Most APIs offer SDKs or libraries for easier integration.

- Data Transmission: Send the audio data to the API for recognition. APIs usually provide methods for sending audio data and receiving text output.

4. Handling Responses:

- Text Processing: Handle the response (recognized text) from the API. This might include handling errors, formatting the text, or performing additional processing.

- Output: Display the recognized text or save it to a file/database.
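One possible way to handle failed requests and save the result is sketched below. Everything here is hypothetical scaffolding: `transcribe` stands for whatever function calls your chosen API, and `TranscriptionError` is a placeholder for the error type that function raises. Real SDKs define their own exceptions, so check their documentation.

```python
import time


class TranscriptionError(Exception):
    """Placeholder for the error your transcribe function raises on failure."""


def transcribe_with_retry(transcribe, audio, attempts=3, base_delay=1.0,
                          sleep=time.sleep):
    """Call transcribe(audio), retrying with exponential backoff on failure."""
    for attempt in range(attempts):
        try:
            return transcribe(audio)
        except TranscriptionError:
            if attempt == attempts - 1:
                raise  # out of retries: let the caller decide what to do
            sleep(base_delay * 2 ** attempt)  # waits 1 s, 2 s, 4 s, ...


def save_transcript(text, path):
    """Write the recognized text to a UTF-8 text file."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(text.strip() + "\n")
```

The `sleep` parameter exists only so the waiting can be replaced in tests. Retrying helps with temporary failures such as network errors; it will not fix poor audio quality.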

Additional Considerations:

- Error Handling: Implement strategies to handle errors gracefully, such as poor audio quality or failed recognition.

- Optimization: Optimize for performance by considering asynchronous processing, batching requests, or utilizing caching mechanisms.

- Testing: Thoroughly test your converter with various audio inputs to ensure accuracy and reliability.

- Privacy and Security: Consider data privacy and security measures, especially when dealing with sensitive audio data.
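For the testing step, a common way to measure accuracy is the word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of reference words. The sketch below is a minimal version that lowercases and splits on whitespace; real evaluations usually normalize punctuation and numbers as well, which is left out here.

```python
def word_error_rate(reference, hypothesis):
    """Return the word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)
```

A WER of 0 means the transcript matches the reference exactly. The value can exceed 1 when the recognizer outputs many extra words.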

Example (Python Using Google Cloud Speech-to-Text):

Here's a simplified example that transcribes a short WAV file:

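    from google.cloud import speech

    # The client picks up your Google Cloud credentials from the environment.
    client = speech.SpeechClient()

    # The audio file must already be uploaded to a Cloud Storage bucket.
    audio = speech.RecognitionAudio(uri="gs://your-bucket/your-audio-file.wav")

    config = speech.RecognitionConfig(
        encoding=speech.RecognitionConfig.AudioEncoding.LINEAR16,
        language_code="en-US",
    )

    # Synchronous recognition is meant for short audio (about a minute or less).
    response = client.recognize(config=config, audio=audio)

    for result in response.results:
        # Alternatives are ordered with the most likely transcript first.
        print("Transcript: {}".format(result.alternatives[0].transcript))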

This example assumes you have the google-cloud-speech package installed, Google Cloud credentials configured, and an audio file stored in a Google Cloud Storage bucket.

Remember, this is a basic outline, and the complexity may vary based on your specific requirements and the chosen technologies. Additionally, staying updated with API documentation and best practices can significantly enhance the accuracy and functionality of your voice-to-text converter.

How to Build a Voice-to-Text Converter

1. Speech Acquisition

The first step in building a voice-to-text converter is to acquire the speech signal. This can be done using a microphone or by recording an audio file. The quality of the microphone is important, as a low-quality microphone will produce a noisy signal that is difficult to transcribe.

2. Feature Extraction

Once the speech signal has been acquired, the next step is to extract features from it. The signal is usually split into short overlapping frames (roughly 20 to 30 ms each), and each frame is summarized by a small vector that describes its spectral content. Common choices include:

- Mel-frequency cepstral coefficients (MFCCs): MFCCs compactly describe the spectral envelope of each frame on the mel scale, which approximates how human hearing resolves pitch. They have long been a standard choice for speech recognition.

- Linear predictive coding (LPC): LPC models each sample as a linear combination of the preceding samples. The resulting coefficients describe the shape of the vocal tract filter, which makes them useful for distinguishing speech sounds.

Hidden Markov models (HMMs) are often mentioned alongside these features, but they are not features themselves. An HMM is a statistical model of a sequence of events, and in classical speech recognizers it models how the feature vectors evolve over time as the speaker moves from one sound to the next.
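To make feature extraction more concrete, the sketch below shows two building blocks of MFCC computation: cutting the signal into overlapping, windowed frames and converting frequencies to the mel scale. It is not a full MFCC implementation (the Fourier transform, filterbank energies, and cosine transform are omitted). The 25 ms frame and 10 ms hop are common defaults assumed here, and the mel formula is one of several variants in use.

```python
import math


def hz_to_mel(frequency_hz):
    """Convert a frequency in Hz to mels (a commonly used formula)."""
    return 2595.0 * math.log10(1.0 + frequency_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def frame_signal(samples, sample_rate, frame_ms=25, hop_ms=10):
    """Split samples into overlapping frames and apply a Hamming window."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop_len = int(sample_rate * hop_ms / 1000)
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop_len):
        frame = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(frame, window)])
    return frames


def mel_center_frequencies(n_filters, low_hz, high_hz):
    """Center frequencies (Hz) of filters spaced evenly on the mel scale."""
    low, high = hz_to_mel(low_hz), hz_to_mel(high_hz)
    step = (high - low) / (n_filters + 1)
    return [mel_to_hz(low + step * (i + 1)) for i in range(n_filters)]
```

In practice you would rely on an audio library for complete MFCCs; this sketch only shows why the filters crowd together at low frequencies and spread out at high ones.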

3. Model Training

The next step is to train a model to recognize the features extracted from the speech signal. This model is typically a neural network, which is a type of machine learning algorithm that is well-suited for pattern recognition tasks.

The training process involves feeding the model a large dataset of speech recordings paired with their transcripts. The model learns to associate the features extracted from the speech signal with the corresponding words.

4. Decoding

Once the model has been trained, it can be used to decode the speech signal into text. This involves taking the features extracted from the speech signal and using them to predict the corresponding words.
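How decoding works depends on the model. As one illustration, the sketch below assumes a model trained with connectionist temporal classification (CTC), which outputs a probability for every symbol at every frame plus a special "blank" symbol. That assumption is not stated above; it is simply a common setup. Greedy decoding takes the most likely symbol per frame, merges consecutive repeats, and drops the blanks.

```python
def greedy_ctc_decode(frame_probs, alphabet, blank=0):
    """Decode per-frame symbol probabilities into text.

    frame_probs: one list of probabilities per frame.
    alphabet:    alphabet[i] is the symbol for index i (the blank entry is unused).
    blank:       index of the CTC blank symbol (assumed to be 0 here).
    """
    # Pick the most likely symbol index for every frame.
    best_path = [max(range(len(probs)), key=probs.__getitem__)
                 for probs in frame_probs]

    decoded = []
    previous = None
    for index in best_path:
        # Keep a symbol only when it differs from the previous frame
        # and is not the blank.
        if index != previous and index != blank:
            decoded.append(alphabet[index])
        previous = index
    return "".join(decoded)
```

The blank is what lets the model output double letters: the path "a, blank, a" decodes to "aa", while "a, a" decodes to "a". Production decoders usually replace the greedy step with a beam search.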

5. Post-processing

The final step is to apply post-processing to the output of the decoder. This may involve correcting errors, removing duplicate words, and formatting the text.
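A very simple form of this post-processing might look like the sketch below, which removes immediately repeated words, capitalizes the first letter, and adds a final period. The function name and the rules are illustrative assumptions; real systems typically use more careful punctuation and capitalization handling.

```python
def postprocess(text):
    """Drop immediately repeated words and tidy up the sentence."""
    deduped = []
    for word in text.split():
        # Compare case-insensitively with the previous kept word.
        if not deduped or word.lower() != deduped[-1].lower():
            deduped.append(word)

    sentence = " ".join(deduped)
    if not sentence:
        return ""
    sentence = sentence[0].upper() + sentence[1:]
    if sentence[-1] not in ".?!":
        sentence += "."
    return sentence
```

Note that this also removes legitimate repetitions such as "very very", so it is only suitable when repeated words are more likely to be recognition errors than intended speech.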

Specific Guidelines and Software Tools

The following guidelines and software tools can help make a voice-to-text converter accurate, efficient, and easy to use.

Some of the most important guidelines for building voice-to-text converters include:

- Use a high-quality microphone: The quality of the microphone used to record the speech signal will have a significant impact on the accuracy of the voice-to-text converter.

- Train the model on a large dataset of speech data: The more data the model is trained on, the better it will be able to recognize different voices and accents.

- Use a state-of-the-art acoustic model: The acoustic model maps the features extracted from the speech signal to speech sounds, such as phonemes or characters. A good acoustic model distinguishes similar sounds reliably across speakers and recording conditions, which improves the accuracy of the voice-to-text converter.

- Use a language model: The language model estimates how likely a sequence of words is, and the recognizer uses it to choose among candidate transcriptions that sound alike. A good language model takes the context of the speech into account, which improves the accuracy of the voice-to-text converter.
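To illustrate the role of the language model, the sketch below trains a tiny bigram model (word-pair counts with add-one smoothing) and uses it to pick the more plausible of several candidate transcriptions. The training sentences and function names are invented for the example, and a real recognizer would combine this score with the acoustic model's score rather than use it alone.

```python
import math
from collections import Counter


def train_bigram_model(sentences):
    """Count word pairs in the training sentences."""
    contexts, bigrams, vocab = Counter(), Counter(), {"</s>"}
    for sentence in sentences:
        words = sentence.lower().split()
        vocab.update(words)
        tokens = ["<s>"] + words + ["</s>"]
        contexts.update(tokens[:-1])  # how often each word starts a pair
        bigrams.update(zip(tokens, tokens[1:]))
    return contexts, bigrams, len(vocab)


def log_probability(sentence, model):
    """Log-probability of a sentence under the bigram model."""
    contexts, bigrams, vocab_size = model
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothing keeps unseen word pairs from scoring zero.
        total += math.log((bigrams[(prev, word)] + 1)
                          / (contexts[prev] + vocab_size))
    return total


def pick_best(candidates, model):
    """Return the candidate transcription the model finds most likely."""
    return max(candidates, key=lambda text: log_probability(text, model))
```

For example, after training on a few sentences that contain "recognize speech", the model prefers that phrase over the similar-sounding "wreck a nice beach". Because the score is a sum over word pairs, longer candidates are penalized, which a production system would compensate for.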

Some of the most popular software tools for building voice-to-text converters include:

- Kaldi: Kaldi is a free and open-source toolkit for speech recognition. It is a powerful toolkit that can be used to build high-quality voice-to-text converters.

- CMU Sphinx: CMU Sphinx is another free and open-source toolkit for speech recognition. It is a well-established toolkit, and its lightweight PocketSphinx engine can run offline on modest hardware.

- Google Cloud Speech-to-Text: Google Cloud Speech-to-Text is a cloud-based service that transcribes speech into text. It supports many languages and handles both pre-recorded and streaming audio.

- Amazon Transcribe: Amazon Transcribe is another cloud-based service that transcribes speech into text. It also handles both pre-recorded and streaming audio.

Conclusion

Building a voice-to-text converter is a complex task that requires a combination of technical skills and creativity. By following the guidelines and using the software tools listed above, you can build a voice-to-text converter that is accurate, efficient, and easy to use.