What is lexical analysis in compiler development?

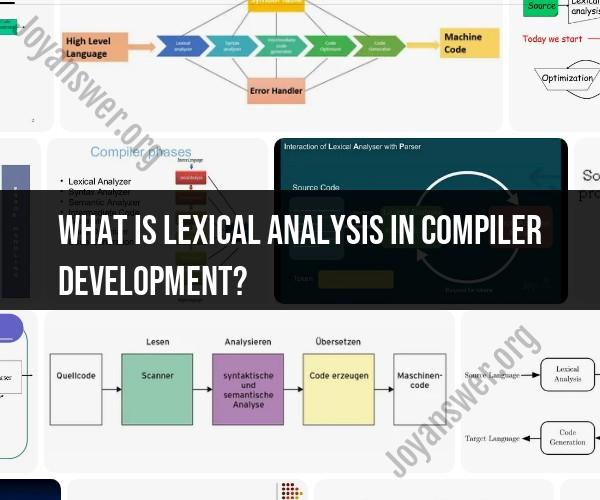

Lexical analysis, often referred to as scanning or lexing, is the first phase in compiling or interpreting a programming language. It converts the raw characters of the source code into a sequence of tokens. Tokens are the smallest meaningful units of the source code, such as keywords, identifiers, operators, and literals, and they serve as input for the subsequent phases of the compiler. Here are some key concepts related to lexical analysis in compiler development:

Tokenization:

- Tokenization is the process of breaking down the source code into individual tokens. Each token pairs a category (the token type) with the matched text (the lexeme). For example, `x = 42` yields an identifier `x`, an operator `=`, and a number literal `42`.

Regular Expressions:

- Regular expressions are used to define the patterns for recognizing different tokens. These patterns specify the syntax rules for keywords, identifiers, numbers, and other language constructs.
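As a rough illustration of token patterns, the sketch below defines a few regular expressions for a made-up toy language and classifies single lexemes with them. The token names, the keyword list, and the `classify` helper are assumptions chosen for this example, not part of any particular compiler.

```python
import re

# Hypothetical token patterns for a small toy language (illustrative only).
# Order matters: KEYWORD is listed before IDENT so reserved words win.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("KEYWORD", r"\b(?:if|else|while|return)\b"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"==|!=|<=|>=|[+\-*/=<>]"),
    ("PUNCT", r"[(){};,]"),
]

# Combine all patterns into one alternation of named groups.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def classify(lexeme):
    """Return the token type whose pattern matches the whole lexeme, or None."""
    match = MASTER.fullmatch(lexeme)
    return match.lastgroup if match else None


for lexeme in ["while", "whilex", "3.14", "<=", "@"]:
    print(repr(lexeme), "->", classify(lexeme))
```

Listing the keyword pattern before the identifier pattern is one common way to keep reserved words from being classified as identifiers; another is to match identifiers first and look them up in a keyword set afterwards.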

Lexical Errors:

- During lexical analysis, the lexer identifies lexical errors, which occur when a run of characters cannot form any valid token. Typical examples are a character that is not part of the language's alphabet, an unterminated string literal, or a malformed literal such as `9abc`, where digits run straight into identifier characters.
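The following sketch shows how such errors might be detected. It assumes a tiny language made only of integers, identifiers, and a handful of operator characters; the pattern names and the `find_lexical_errors` function are hypothetical.

```python
import re

# Assumed toy language: integers, identifiers, and a few single-character operators.
WHITESPACE = re.compile(r"\s+")
BAD_NUMBER = re.compile(r"\d+[A-Za-z_]\w*")       # digits running into identifier characters
VALID_TOKEN = re.compile(r"\d+|[A-Za-z_]\w*|[+\-*/=();]")


def find_lexical_errors(source):
    """Return a list of (offset, message) pairs for text that forms no valid token."""
    errors = []
    pos = 0
    while pos < len(source):
        match = WHITESPACE.match(source, pos)
        if match:
            pos = match.end()
            continue
        match = BAD_NUMBER.match(source, pos)
        if match:
            errors.append((pos, f"malformed literal {match.group()!r}"))
            pos = match.end()
            continue
        match = VALID_TOKEN.match(source, pos)
        if match:
            pos = match.end()
            continue
        # No pattern matched: report the character and skip it so scanning can go on.
        errors.append((pos, f"invalid character {source[pos]!r}"))
        pos += 1
    return errors


print(find_lexical_errors("x = 9abc + y @ 2"))
```

Skipping the offending text and continuing, rather than stopping at the first problem, lets a single run report several errors.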

Token Types:

- Token types are predefined categories for different language constructs. Common token types include keywords, identifiers, operators, punctuation, and literals (e.g., numbers and strings).

Whitespace and Comments:

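- Lexical analyzers typically discard whitespace and comments. These elements separate tokens but are not tokens themselves, so they never reach the parser. There are exceptions: in languages where layout is significant, such as Python, the lexer turns indentation changes into tokens.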

Symbol Tables:

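- A symbol table is a data structure that stores information about identifiers and their associated attributes (e.g., data types, memory addresses). It is shared across compiler phases rather than owned by the lexer: the lexer may create an entry the first time it sees an identifier, while attributes such as types and addresses are filled in by later phases. Symbol tables are essential for tracking the usage of variables and other symbols in the source code.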
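A minimal sketch of this idea, assuming a dictionary-backed table: the class name, the `intern` and `lookup` methods, and the attribute fields are all hypothetical and chosen only for illustration.

```python
class SymbolTable:
    """Maps each identifier name to a single shared attribute record."""

    def __init__(self):
        self._entries = {}

    def intern(self, name, line):
        """Return the record for name, creating it on first sight."""
        if name not in self._entries:
            # "type" is left empty here; a later phase would fill it in.
            self._entries[name] = {"name": name, "first_line": line, "type": None}
        return self._entries[name]

    def lookup(self, name):
        """Return the record for name, or None if it was never seen."""
        return self._entries.get(name)

    def __len__(self):
        return len(self._entries)


table = SymbolTable()
# Pretend the lexer saw these identifiers on these lines.
for line, name in [(1, "count"), (2, "total"), (3, "count")]:
    table.intern(name, line)

# A later phase (for example, semantic analysis) attaches a type.
table.lookup("total")["type"] = "int"
print(len(table), table.lookup("count"), table.lookup("total"))
```

Real compilers usually also track scopes, so a production symbol table is typically a stack or tree of such maps rather than one flat dictionary.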

Efficiency:

- Efficiency is an important consideration in lexical analysis. The lexer is the only phase that examines every character of the input, so a slow lexer can take a noticeable share of total compilation time. For this reason lexers are usually designed to make a single linear pass over the source.

Lexical Analyzers:

- Lexical analyzers, also known as lexers or scanners, are the software components responsible for performing lexical analysis. They read the source code character by character, match it against the token patterns, and produce a stream of tokens as output. Lexers produced by generator tools typically compile the regular expressions into a finite automaton instead of evaluating each expression separately.
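To make this concrete, here is a small lexer sketch built on Python's `re` module. The token set, the `#` comment syntax, and the keyword list are assumptions for a toy language; the overall shape (one combined pattern with named groups) is a common idiom rather than the only way to write a scanner.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str
    text: str
    line: int
    column: int


# Assumed toy language: '#' comments, integers, identifiers, a few operators.
TOKEN_SPEC = [
    ("COMMENT", r"#[^\n]*"),
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=]"),
    ("NEWLINE", r"\n"),
    ("SKIP", r"[ \t\r]+"),
    ("MISMATCH", r"."),        # any other single character is an error
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))
KEYWORDS = {"let", "print"}    # hypothetical reserved words


def tokenize(source: str) -> Iterator[Token]:
    """Yield tokens in source order, skipping whitespace and comments."""
    line, line_start = 1, 0
    for match in MASTER.finditer(source):
        kind, text = match.lastgroup, match.group()
        column = match.start() - line_start + 1
        if kind == "NEWLINE":
            line += 1
            line_start = match.end()
        elif kind in ("SKIP", "COMMENT"):
            continue
        elif kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {text!r} at line {line}, column {column}")
        else:
            if kind == "IDENT" and text in KEYWORDS:
                kind = "KEYWORD"
            yield Token(kind, text, line, column)


for token in tokenize("let x = 42 # answer\nprint x"):
    print(token)
```

Writing the lexer as a generator means the parser can pull tokens one at a time without the whole token list being built first.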

Integration with the Parser:

- The output of the lexical analysis phase is a stream of tokens that serves as input for the subsequent parsing phase of the compiler. The parser uses the tokens to build an abstract syntax tree (AST) and perform syntactic analysis.
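The hand-off from lexer to parser can be sketched with a tiny recursive-descent parser for arithmetic expressions. Everything here is an assumption made for illustration: the grammar (only `+`, `*`, parentheses, and integers), the tuple-based AST, and the deliberately simplified `tokenize` helper.

```python
import re

TOKEN_RE = re.compile(r"(?P<NUMBER>\d+)|(?P<OP>[+*()])|(?P<SKIP>\s+)")


def tokenize(source):
    """Return (kind, text) pairs. Simplified: unknown characters are silently skipped."""
    return [(m.lastgroup, m.group()) for m in TOKEN_RE.finditer(source)
            if m.lastgroup != "SKIP"]


class Parser:
    """Consumes a token list and builds a nested-tuple AST."""

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else ("EOF", "")

    def advance(self):
        token = self.peek()
        self.pos += 1
        return token

    def parse_expr(self):        # expr := term ('+' term)*
        node = self.parse_term()
        while self.peek() == ("OP", "+"):
            self.advance()
            node = ("+", node, self.parse_term())
        return node

    def parse_term(self):        # term := factor ('*' factor)*
        node = self.parse_factor()
        while self.peek() == ("OP", "*"):
            self.advance()
            node = ("*", node, self.parse_factor())
        return node

    def parse_factor(self):      # factor := NUMBER | '(' expr ')'
        kind, text = self.advance()
        if kind == "NUMBER":
            return ("num", int(text))
        if (kind, text) == ("OP", "("):
            node = self.parse_expr()
            if self.advance() != ("OP", ")"):
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {text!r}")


def parse(source):
    parser = Parser(tokenize(source))
    tree = parser.parse_expr()
    if parser.peek()[0] != "EOF":
        raise SyntaxError("unexpected trailing input")
    return tree


print(parse("1 + 2 * 3"))
print(parse("(1 + 2) * 3"))
```

Note that the parser never looks at raw characters: it only compares token kinds and texts, which is the separation of concerns the lexer exists to provide.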

Error Handling:

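- When the lexer finds a lexical error, it should report it with enough context to act on: at minimum the line and column of the offending text and a description of what was wrong. Many lexers also skip past the bad input and keep scanning so that several errors can be reported in one run. Clear reporting helps programmers understand and fix issues in their code.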
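One possible shape for such a report is sketched below. The `format_lexical_error` function, its parameters, and the `file:line:column` layout are assumptions for this example; it takes a character offset and turns it into a message with the source line and a caret under the problem.

```python
def format_lexical_error(source, offset, message, filename="<input>"):
    """Build a diagnostic with line, column, the source line, and a caret marker."""
    line_number = source.count("\n", 0, offset) + 1
    line_start = source.rfind("\n", 0, offset) + 1   # rfind returns -1 on the first line
    line_end = source.find("\n", offset)
    if line_end == -1:
        line_end = len(source)
    column = offset - line_start + 1                 # 1-based column
    line_text = source[line_start:line_end]
    caret = " " * (column - 1) + "^"
    return f"{filename}:{line_number}:{column}: error: {message}\n{line_text}\n{caret}"


source = "x = 1\ny = 2 $ 3\n"
offset = source.index("$")
print(format_lexical_error(source, offset, "invalid character '$'"))
```

The caret line assumes the source uses spaces for alignment; a line containing tabs would need extra handling to keep the marker under the right character.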

In summary, lexical analysis is the initial step in the compilation process, where source code is divided into tokens and prepared for further analysis. It plays a critical role in identifying the fundamental language constructs and syntax of a program, making it easier for subsequent phases of the compiler to analyze and translate the code into executable instructions.

What is lexical analysis in the context of compiler development?

Lexical analysis is the first phase of the compilation process. It breaks the source code into a sequence of tokens, which are the basic building blocks of the programming language. Tokens can be keywords, identifiers, operators, punctuation marks, and literals.

How does lexical analysis play a role in the compilation process of programming languages?

Lexical analysis plays an important role in the compilation process by providing the compiler with a stream of tokens that can be easily parsed. The parser is the next phase of the compiler and it is responsible for analyzing the tokens and generating the abstract syntax tree (AST). The AST is a representation of the program's structure and it is used by the compiler to generate the target code.

What are the components and significance of lexical analysis in compiler design?

The main components of lexical analysis are:

- Token definition: The lexer must define the tokens of the programming language. This includes defining the regular expressions that match the tokens.

- Token recognition: The lexer must scan the source code and identify the tokens.

- Symbol table maintenance: In many compilers the lexer enters each identifier it encounters into the symbol table, a data structure that stores information about identifiers. Attributes such as type and value are usually added by later phases.

Lexical analysis is significant in compiler design because it provides the compiler with a stream of tokens that can be easily parsed. The parser's grammar stays simpler because it works on tokens rather than individual characters, and the lexer can be optimized independently of the rest of the compiler.

Here are some additional benefits of lexical analysis:

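- Lexical analysis can help to detect errors in the source code early. For example, the lexer can detect an invalid character, an unterminated string literal, or a malformed number. It cannot detect a misspelled identifier or a misused operator; those are found later, during parsing or semantic analysis.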

- Lexical analysis can improve the performance of the compiler. Whitespace and comments are discarded once, and characters are grouped into tokens up front, so later phases work on a compact token stream instead of re-examining raw characters.

- Lexical analysis can make the compiler more portable. Details of the input, such as character encoding and line-ending conventions, can be confined to the lexer, so the rest of the compiler is insulated from platform-specific differences.

Overall, lexical analysis is an important part of compiler design and it provides a number of benefits to the compiler.