What does lexical analysis mean?

Lexical analysis, often referred to as lexing or tokenization, is the first phase of the compilation process in computer programming. It is the process of analyzing the source code of a program and breaking it down into a sequence of fundamental language elements called "tokens." These tokens are the smallest meaningful units of a programming language and include keywords, identifiers, operators, constants (literals), and punctuation symbols.

The primary purpose of lexical analysis is to convert a stream of characters in the source code into a stream of tokens, making it easier for subsequent phases of the compiler to process and understand the code. Here's what lexical analysis involves:

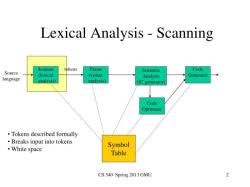

Scanning: The process starts with scanning the source code character by character. The scanner reads the input characters and groups them into sequences, called lexemes, that form valid tokens according to the language's lexical rules (usually specified as regular expressions).
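A minimal sketch of a hand-written scanner, assuming a small, hypothetical C-like language with identifiers, integer and decimal numbers, four two-character comparison operators, and single-character punctuation. It walks the input one character at a time and applies the "longest match" rule, so `>=` becomes one lexeme rather than `>` followed by `=`. The function name `scan` and the operator set are illustrative choices, not taken from any particular compiler.

```python
# Hypothetical two-character operators; a real language defines its own set.
TWO_CHAR_OPS = {"==", "!=", "<=", ">="}


def scan(source: str) -> list[str]:
    """Group the characters of `source` into lexemes, reading one character at a time."""
    lexemes: list[str] = []
    i, n = 0, len(source)
    while i < n:
        ch = source[i]
        if ch.isspace():
            # Whitespace separates lexemes but never belongs to one.
            i += 1
        elif ch.isalpha() or ch == "_":
            # Identifier or keyword: letters, digits, and underscores.
            start = i
            while i < n and (source[i].isalnum() or source[i] == "_"):
                i += 1
            lexemes.append(source[start:i])
        elif ch.isdigit():
            # Integer such as 42, optionally followed by a fraction as in 3.14.
            start = i
            while i < n and source[i].isdigit():
                i += 1
            if i + 1 < n and source[i] == "." and source[i + 1].isdigit():
                i += 1  # consume the decimal point
                while i < n and source[i].isdigit():
                    i += 1
            lexemes.append(source[start:i])
        elif source[i:i + 2] in TWO_CHAR_OPS:
            # Longest match: ">=" is one lexeme, not ">" followed by "=".
            lexemes.append(source[i:i + 2])
            i += 2
        else:
            # Any other character is a one-character operator or punctuation mark.
            lexemes.append(ch)
            i += 1
    return lexemes
```

For example, `scan("if (x >= 3.14) y = x + 1;")` returns `['if', '(', 'x', '>=', '3.14', ')', 'y', '=', 'x', '+', '1', ';']`.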

Tokenization: As each lexeme is read, the scanner identifies which type of token it is. For example, keywords like "if" and "while", identifiers (variable names) like "x" and "counter", operators like "+", constants like "42" and "3.14", and symbols like parentheses and semicolons are each recognized and represented as distinct tokens.
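The categorization step can be sketched as a function that maps each lexeme to a token type. This is a simplified illustration: the keyword list, the type names (`KEYWORD`, `IDENTIFIER`, `NUMBER`, `OPERATOR`, `SYMBOL`), and the `classify` helper are assumptions for a hypothetical language. Keywords are first matched by the identifier pattern and then looked up in a keyword table, a common way to keep the patterns simple.

```python
import re

# Hypothetical keyword table for a small C-like language.
KEYWORDS = {"if", "else", "while", "return"}

# (token type, pattern) rules, tried in order; the first full match wins.
RULES = [
    ("NUMBER", re.compile(r"\d+(?:\.\d+)?")),
    ("IDENTIFIER", re.compile(r"[A-Za-z_]\w*")),
    ("OPERATOR", re.compile(r"==|!=|<=|>=|[+\-*/=<>]")),
    ("SYMBOL", re.compile(r"[(){};,]")),
]


def classify(lexeme: str) -> tuple[str, str]:
    """Return a (token_type, lexeme) pair for one lexeme."""
    for kind, pattern in RULES:
        if pattern.fullmatch(lexeme):
            # Keywords match the identifier pattern, so look them up afterwards.
            if kind == "IDENTIFIER" and lexeme in KEYWORDS:
                return ("KEYWORD", lexeme)
            return (kind, lexeme)
    raise ValueError(f"unrecognized lexeme: {lexeme!r}")


lexemes = ["while", "(", "counter", "<", "42", ")", "x", "=", "x", "+", "3.14", ";"]
tokens = [classify(lexeme) for lexeme in lexemes]
```

Here `tokens` begins with `('KEYWORD', 'while')`, `('SYMBOL', '(')`, and `('IDENTIFIER', 'counter')`.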

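Whitespace and Comment Handling: Lexical analyzers typically discard whitespace (spaces, tabs, and line breaks) and comments, because in most languages these only separate tokens and do not otherwise contribute to the structure of the program. Whitespace-sensitive languages are the exception: Python's lexer, for instance, turns changes in indentation into INDENT and DEDENT tokens.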
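One common way to drop whitespace and comments is to give them their own patterns, match them like any other token, and simply not emit them. The sketch below assumes a hypothetical language with `//` line comments; the token names and the `lex` function are illustrative.

```python
import re

# Hypothetical token rules. COMMENT comes before OP so that "//" starts a
# comment instead of being read as two division operators.
TOKEN_SPEC = [
    ("COMMENT", r"//[^\n]*"),
    ("WHITESPACE", r"\s+"),
    ("NUMBER", r"\d+(?:\.\d+)?"),
    ("NAME", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=;()]"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))

# Recognized like any other token, but never handed to the parser.
DISCARD = {"COMMENT", "WHITESPACE"}


def lex(source: str) -> list[tuple[str, str]]:
    """Return (kind, text) tokens for `source`, dropping whitespace and comments."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            raise ValueError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.lastgroup not in DISCARD:
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens
```

For `lex("x = 1;  // set x\ny = x + 2.5;")` only the ten meaningful tokens remain, starting with `('NAME', 'x')`; the comment and all whitespace are gone.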

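Error Detection: Lexical analysis can also detect lexical errors, such as characters that do not belong to the language, unterminated string literals, or malformed numbers, and report them to the programmer along with their position in the source. A misspelled keyword, by contrast, is usually not a lexical error: "whlie" is a perfectly valid identifier, so the mistake only surfaces in a later phase such as parsing.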
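A lexer can report errors precisely because it always knows where in the input it is. The following sketch assumes a hypothetical language with double-quoted strings that may not span lines; it raises a custom `LexError` carrying a 1-based line and column for an unexpected character or an unterminated string literal. The names `LexError` and `lex` are illustrative.

```python
import re


class LexError(Exception):
    """A lexical error, with the 1-based line and column where it occurred."""

    def __init__(self, message: str, line: int, column: int) -> None:
        super().__init__(f"{line}:{column}: {message}")
        self.line = line
        self.column = column


# Hypothetical rules: whitespace, one-line double-quoted strings, integers,
# names, and a few operators.
TOKEN_RE = re.compile(r'\s+|"[^"\n]*"|\d+|[A-Za-z_]\w*|[+\-*/=;()]')


def lex(source: str) -> list[str]:
    """Return the lexemes of `source`, raising LexError at the first invalid input."""
    lexemes = []
    pos = 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            # Work out the 1-based line and column of the offending character.
            line = source.count("\n", 0, pos) + 1
            column = pos - source.rfind("\n", 0, pos)
            if source[pos] == '"':
                raise LexError("unterminated string literal", line, column)
            raise LexError(f"unexpected character {source[pos]!r}", line, column)
        if not match.group().isspace():
            lexemes.append(match.group())
        pos = match.end()
    return lexemes
```

Calling `lex("x = 1;\ny = 2 $ 3;")` raises `LexError` with the message `2:7: unexpected character '$'`.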

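Token Stream Generation: The output of the lexical analysis is a stream of tokens in the order they appear in the source code. This token stream is passed on to the next phase of the compiler, the parser, which typically builds a parse tree or an abstract syntax tree (AST) from it. In many compilers the lexer does not produce the whole stream up front; instead, the parser requests the next token whenever it needs one.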
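The token stream can be modeled as a Python generator, so tokens are produced lazily in source order and each one carries its position for later error messages. This is a sketch under assumed token rules; the `Token` fields, the `token_stream` name, and the final `EOF` token are illustrative conventions rather than a standard interface.

```python
import re
from collections.abc import Iterator
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    kind: str    # token category, e.g. "NUMBER"
    text: str    # the lexeme exactly as it appears in the source
    line: int    # 1-based line on which the token starts
    column: int  # 1-based column at which the token starts


# Hypothetical token rules; NEWLINE is tracked for positions, SKIP is dropped.
PATTERN = re.compile("|".join([
    r"(?P<NUMBER>\d+)",
    r"(?P<NAME>[A-Za-z_]\w*)",
    r"(?P<OP>[+\-*/=;()])",
    r"(?P<NEWLINE>\n)",
    r"(?P<SKIP>[ \t]+)",
]))


def token_stream(source: str) -> Iterator[Token]:
    """Yield tokens lazily in source order, followed by a final EOF token."""
    line, line_start, pos = 1, 0, 0
    while pos < len(source):
        match = PATTERN.match(source, pos)
        column = pos - line_start + 1
        if match is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at {line}:{column}")
        if match.lastgroup == "NEWLINE":
            line, line_start = line + 1, match.end()
        elif match.lastgroup != "SKIP":
            yield Token(match.lastgroup, match.group(), line, column)
        pos = match.end()
    yield Token("EOF", "", line, pos - line_start + 1)
```

A parser would call `next()` on the generator each time it needs another token. For `"a = 1;\nb = a + 2;"` the stream ends with `Token(kind='EOF', text='', line=2, column=11)`.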

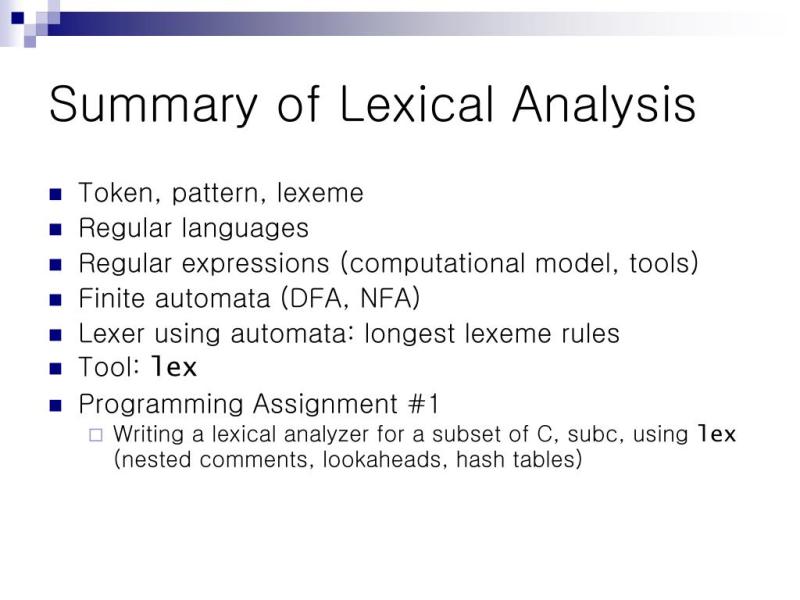

Lexical analysis is essential in programming language processing because it simplifies the task of parsing and interpreting the source code: the parser works with a compact sequence of tokens rather than raw characters, whitespace, and comments. It serves as the foundation for the subsequent phases of compilation, which include syntax analysis, semantic analysis, optimization, and code generation. Keeping lexical analysis separate from these phases also keeps the grammar simpler and lets the lexer use fast, specialized techniques such as finite automata.

Deciphering Lexical Analysis: Understanding the Concept

Lexical analysis, also known as tokenization or scanning, is the first step in the language processing pipeline. It breaks a sequence of characters into meaningful units called tokens. In human language these tokens are typically words and punctuation marks; in programming languages they are keywords, identifiers, symbols, and operators. In both cases they are the building blocks on which every later stage of analysis operates.

Lexical Analysis in Computer Science and Linguistics

In computer science, lexical analysis is a crucial component of compilers and interpreters. It transforms the input source code into a stream of tokens that the parser can process, which is the first step in translating a program written in a high-level language into machine-executable instructions (or, in an interpreter, into a form it can execute directly).
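For a real-world example, Python's standard library includes a lexer for Python source code in the `tokenize` module. The small wrapper below (`python_tokens` is a hypothetical helper name) returns each token's type name, looked up with `token.tok_name`, together with its text. Because the tokenizer reports keywords such as `if` as ordinary `NAME` tokens, the wrapper relabels them using the `keyword` module; that `KEYWORD` label is our own addition, not a token type defined by Python.

```python
import io
import keyword
import token
import tokenize


def python_tokens(source: str) -> list[tuple[str, str]]:
    """Return (token type name, token text) pairs for a piece of Python source."""
    pairs = []
    readline = io.StringIO(source).readline
    for tok in tokenize.generate_tokens(readline):
        name = token.tok_name[tok.type]
        # The tokenizer reports keywords as NAME tokens; "KEYWORD" is our own
        # label, added here with the keyword module, not a Python token type.
        if tok.type == token.NAME and keyword.iskeyword(tok.string):
            name = "KEYWORD"
        pairs.append((name, tok.string))
    return pairs
```

For `"if x > 42:\n    y = 3.14\n"` the result begins with `('KEYWORD', 'if')`, `('NAME', 'x')`, `('OP', '>')`, `('NUMBER', '42')` and contains `INDENT` and `DEDENT` tokens, which is how Python's lexer represents significant indentation.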

In linguistics, and especially in natural language processing (NLP), lexical analysis plays a central role. It helps computers identify and categorize words, phrases, and their grammatical roles in human language, a prerequisite for tasks such as machine translation, sentiment analysis, and text summarization.
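Tokenizing natural-language text follows the same idea. A deliberately simple sketch, assuming that a token is either a run of word characters or a single punctuation mark, needs only one regular expression; `simple_tokenize` is a hypothetical name, and real NLP tokenizers add many language-specific rules on top of this.

```python
import re

# Assumed rule: a token is a run of word characters or one punctuation mark.
WORD_OR_PUNCT = re.compile(r"\w+|[^\w\s]")


def simple_tokenize(text: str) -> list[str]:
    """Split natural-language text into word and punctuation tokens."""
    return WORD_OR_PUNCT.findall(text)
```

`simple_tokenize("Lexers split text, don't they?")` returns `['Lexers', 'split', 'text', ',', 'don', "'", 't', 'they', '?']`. Note how the contraction is broken into three pieces, one of the cases production tokenizers handle specially.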

The Role of Lexical Analysis in Language Processing

Lexical analysis serves as the foundation for various language processing tasks, including:

Tokenization: Breaking down the input text into a sequence of tokens, such as words, punctuation, and symbols.

Part-of-Speech (POS) Tagging: Assigning grammatical tags to each token, indicating its role in the sentence (e.g., noun, verb, adjective).

Morphological Analysis: Breaking down complex words into their constituent morphemes, the smallest meaningful units of language.

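Stemming and Lemmatization: Reducing inflected words to a common base form, so that variants such as "connect", "connected", and "connecting" are treated as the same term during text indexing and retrieval. Stemming does this by heuristically stripping affixes, while lemmatization maps each word to its dictionary form (lemma).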

Named Entity Recognition (NER): Identifying and classifying named entities in text, such as people, places, and organizations.
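To make stemming concrete, here is a toy suffix-stripping stemmer. It is not the Porter stemmer or any other published algorithm: the suffix list and the three-letter minimum stem are arbitrary assumptions chosen for illustration, and `toy_stem` is a hypothetical name.

```python
# Hypothetical suffix list, tried longest first.
SUFFIXES = ("ing", "ed", "es", "s")


def toy_stem(word: str) -> str:
    """Strip one common English suffix, keeping a stem of at least 3 letters."""
    lowered = word.lower()
    for suffix in SUFFIXES:
        if lowered.endswith(suffix) and len(lowered) - len(suffix) >= 3:
            return lowered[: -len(suffix)]
    return lowered
```

`[toy_stem(w) for w in ["jumps", "jumped", "jumping"]]` gives `['jump', 'jump', 'jump']`, but the same rules turn "running" into "runn". That is why practical stemmers rely on carefully ordered rule sets and lemmatizers consult a dictionary.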

Lexical analysis provides a critical layer in language processing, enabling computers to extract meaningful information from text and perform various language-related tasks.