How do you create a lexical analyzer?

Creating a lexical analyzer, also known as a lexer or scanner, is a fundamental step in developing a compiler or interpreter for a programming language. The role of a lexical analyzer is to break down the source code into a sequence of tokens, which are the smallest meaningful units of the language, such as keywords, identifiers, operators, and literals. Here's a beginner's guide to creating a lexical analyzer:

Understand the Language Grammar:

Before you begin, you must have a solid understanding of the grammar and syntax rules of the programming language for which you are building the lexical analyzer. This includes recognizing keywords, operators, identifiers, data types, and other language constructs.

Choose a Programming Language:

Select a programming language for implementing your lexical analyzer. Common choices include Python, C++, Java, and others. The choice depends on your familiarity and the specific requirements of your project.

Define Token Types:

Create a list of token types specific to your programming language. Each token type corresponds to a particular category of language construct. For example, in a C-like language, you might have token types for keywords, identifiers, numeric literals, and symbols.
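As a rough illustration, the token types for a small C-like language could be written as a Python enumeration. The names `TokenType`, `KEYWORDS`, and `classify_word` are assumptions made for this sketch, and the keyword set is deliberately incomplete.

```python
from enum import Enum, auto


class TokenType(Enum):
    """Hypothetical token categories for a small C-like language."""
    KEYWORD = auto()     # reserved words such as `if`, `while`, `return`
    IDENTIFIER = auto()  # names of variables and functions
    NUMBER = auto()      # numeric literals such as 42 or 3.14
    SYMBOL = auto()      # operators and punctuation such as + or ;


# Assumed keyword set; a real language would list all of its reserved words.
KEYWORDS = {"if", "else", "while", "return", "int"}


def classify_word(text: str) -> TokenType:
    """Decide whether a word-like lexeme is a keyword or an identifier."""
    return TokenType.KEYWORD if text in KEYWORDS else TokenType.IDENTIFIER
```

Keywords and identifiers usually share the same character pattern, so one common approach is to match a word first and then look it up in the keyword set, as `classify_word` does here.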

Implement Regular Expressions:

Regular expressions are patterns that match specific text sequences. Create regular expressions to match the different token types in your language. Each regular expression should describe the syntax of a token. For instance, a regular expression for identifiers might be `[a-zA-Z_][a-zA-Z0-9_]*` to match valid variable names.

Tokenization:

Write code to tokenize the source code. This involves scanning the source code character by character and matching it against the regular expressions for the various token types. As you match characters, you build up tokens.
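One way to sketch this step in Python is to join the per-token patterns into a single regular expression with named groups and match it repeatedly from the current position. The token names, the `TOKEN_SPEC` list, and the small `Token` tuple are assumptions for illustration, not a fixed design.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    type: str   # token category, e.g. "NUMBER"
    value: str  # the matched text (the lexeme)


# Order matters: earlier patterns win when several could match at a position.
TOKEN_SPEC = [
    ("NUMBER",     r"\d+(?:\.\d+)?"),
    ("IDENTIFIER", r"[a-zA-Z_][a-zA-Z0-9_]*"),
    ("SYMBOL",     r"[+\-*/=();]"),
    ("SKIP",       r"\s+"),
]
MASTER_RE = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> Iterator[Token]:
    """Yield tokens from `source`, skipping whitespace."""
    pos = 0
    while pos < len(source):
        match = MASTER_RE.match(source, pos)
        if match is None:
            raise ValueError(f"unexpected character {source[pos]!r} at offset {pos}")
        pos = match.end()
        if match.lastgroup != "SKIP":
            yield Token(match.lastgroup, match.group())
```

For example, `list(tokenize("x = 42 + y1;"))` produces six tokens: an identifier, a symbol, a number, a symbol, an identifier, and a final symbol.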

Token Objects:

Create data structures or objects to represent tokens. These structures should store the token type and the actual text of the token. For instance, you might have a `Token` class with attributes for type and value.

Error Handling:

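Implement error handling to deal with lexical errors in the source code, such as characters that are not part of the language or malformed tokens. Syntax errors in the arrangement of otherwise valid tokens are normally left to the parser. You should specify how the lexical analyzer reacts to such errors, for example by stopping at the first one or by reporting it with its line and column and continuing.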
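The sketch below shows one possible error-handling policy: report each unrecognized character with its line and column, skip it, and keep scanning. The `LexerError` class, the `find_lexical_errors` function, and the token pattern are hypothetical, and the code assumes Python 3.9 or later for the `list[...]` annotation.

```python
import re


class LexerError(Exception):
    """Raised or collected for input that matches no token pattern (hypothetical)."""

    def __init__(self, char: str, line: int, column: int):
        super().__init__(f"unexpected character {char!r} at line {line}, column {column}")
        self.char, self.line, self.column = char, line, column


# Assumed token patterns: whitespace, integers, identifiers, a few symbols.
TOKEN_RE = re.compile(r"\s+|\d+|[a-zA-Z_][a-zA-Z0-9_]*|[+\-*/=();]")


def find_lexical_errors(source: str) -> list[LexerError]:
    """Scan all of `source`, collecting an error for each unrecognized character.

    Skipping one character and resuming lets a single run report every
    problem instead of stopping at the first.
    """
    errors = []
    pos, line, line_start = 0, 1, 0
    while pos < len(source):
        match = TOKEN_RE.match(source, pos)
        if match is None:
            errors.append(LexerError(source[pos], line, pos - line_start + 1))
            pos += 1  # recovery: skip the offending character
            continue
        newlines = match.group().count("\n")
        if newlines:
            # Track where the current line begins so columns stay accurate.
            line += newlines
            line_start = match.start() + match.group().rfind("\n") + 1
        pos = match.end()
    return errors
```

A lexer that should stop at the first problem could instead `raise` the `LexerError` where this sketch appends it.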

Testing:

Test your lexical analyzer on a variety of source code samples to ensure it correctly identifies and tokenizes the input. Make sure it can handle different scenarios, including valid and invalid code.

Integration:

Integrate the lexical analyzer with the rest of your compiler or interpreter. This typically involves passing the tokens to the parser or other components for further processing.
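To illustrate the hand-off, here is a minimal sketch in which a lexer yields tokens and a toy parser pulls them one at a time. The grammar (integers joined by `+` and `-`), the `EOF` marker token, and the function names are all assumptions made for this example.

```python
import re
from typing import Iterator, Tuple

Token = Tuple[str, str]  # (type, text)


def tokenize(source: str) -> Iterator[Token]:
    """Minimal lexer for integers, + and -.

    Note: re.finditer silently skips characters that match no pattern,
    so this sketch does no lexical error reporting.
    """
    for match in re.finditer(r"(?P<NUMBER>\d+)|(?P<OP>[+-])|(?P<SKIP>\s+)", source):
        if match.lastgroup != "SKIP":
            yield match.lastgroup, match.group()
    yield "EOF", ""  # sentinel so the parser can detect the end of input


def parse_sum(tokens: Iterator[Token]) -> int:
    """Evaluate NUMBER (OP NUMBER)* by consuming tokens from the lexer."""
    kind, text = next(tokens)
    if kind != "NUMBER":
        raise SyntaxError(f"expected a number, got {kind}")
    total = int(text)
    kind, text = next(tokens)
    while kind == "OP":
        op = text
        kind, text = next(tokens)
        if kind != "NUMBER":
            raise SyntaxError(f"expected a number after {op!r}, got {kind}")
        total = total + int(text) if op == "+" else total - int(text)
        kind, text = next(tokens)
    if kind != "EOF":
        raise SyntaxError(f"unexpected token {text!r}")
    return total
```

With these definitions, `parse_sum(tokenize("10 + 5 - 3"))` returns `12`. Because `tokenize` is a generator, the parser requests tokens on demand and the whole input never has to be tokenized up front.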

Optimization:

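Depending on your project's requirements, you may need to optimize the performance of the lexical analyzer. This can involve techniques like replacing regular expressions with hand-written character tests in frequently executed paths, or buffering so that input is read and tokens are produced in batches rather than one character at a time.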

Documentation:

Document your code thoroughly, describing the token types, regular expressions, and how the lexical analyzer fits into the larger project.

Maintenance:

Maintain and update your lexical analyzer as needed when you add new language features or make improvements to the compiler or interpreter.

Building a lexical analyzer is a significant step in the development of a compiler or interpreter, and it requires a good understanding of both the programming language's syntax and regular expressions. Be prepared for some debugging and testing as you refine your lexer to ensure accurate tokenization of source code.

How to create a lexical analyzer for processing programming language source code?

To create a lexical analyzer for processing programming language source code, you can follow these steps:

- Define the tokens of the programming language. Tokens are the basic building blocks of a programming language, such as keywords, identifiers, operators, and punctuation marks.

- Create a regular expression for each token. Regular expressions are patterns that can be used to match strings of characters.

- Write a program to scan the source code and identify the tokens. The program should use the regular expressions to match the tokens in the source code.

- Build a symbol table. The symbol table is a data structure that stores information about the identifiers found in the source code, such as their names and, once later compiler phases fill it in, their types and scopes.
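The last step can be sketched as follows. This is a simplified illustration that records only where each identifier first appears and how often it occurs; the keyword set, the field names `first_line` and `count`, and the function name are assumptions. It uses `dict[...]` annotations, so it assumes Python 3.9 or later.

```python
import re

KEYWORDS = {"int", "return"}  # assumed keyword set for this sketch
WORD_RE = re.compile(r"[a-zA-Z_][a-zA-Z0-9_]*")


def build_symbol_table(source: str) -> dict[str, dict]:
    """Map each identifier to where it first appears and how often it occurs."""
    table: dict[str, dict] = {}
    for line_no, line in enumerate(source.splitlines(), start=1):
        for match in WORD_RE.finditer(line):
            name = match.group()
            if name in KEYWORDS:
                continue  # keywords are not symbols
            entry = table.setdefault(name, {"first_line": line_no, "count": 0})
            entry["count"] += 1
    return table
```

In a full compiler, later phases such as semantic analysis would typically add type and scope information to each entry.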

What are the steps and techniques involved in developing a lexical analysis tool?

The following are some steps and techniques involved in developing a lexical analysis tool:

- Design the lexical analyzer. This involves defining the tokens of the programming language and creating a regular expression for each token.

- Implement the lexical analyzer. This involves writing a program to scan the source code and identify the tokens.

- Test the lexical analyzer. This involves testing the lexical analyzer with a variety of source code to ensure that it can correctly identify the tokens.

- Deploy the lexical analyzer. This involves making the lexical analyzer available to other developers.

How to implement a lexical analyzer for various programming languages?

To implement a lexical analyzer for various programming languages, you can use the following steps:

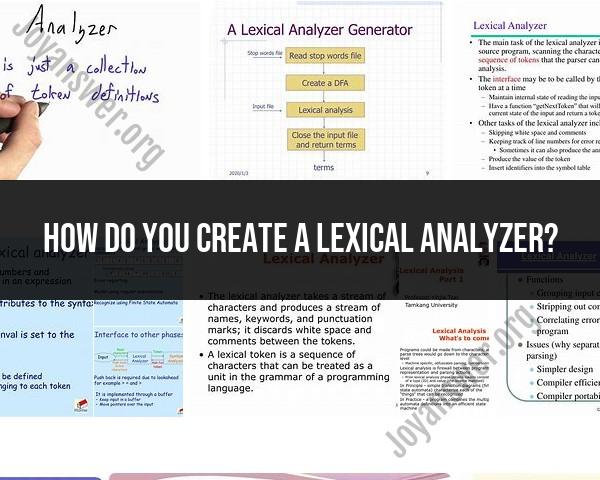

- Choose a lexer generator. A lexer generator is a tool, such as Lex, Flex, or ANTLR, that generates a lexical analyzer from a set of regular expressions.

- Write a lexer specification. The lexer specification is a set of regular expressions that define the tokens of the programming language.

- Generate the lexical analyzer. Use the lexer generator to generate a lexical analyzer from the lexer specification.
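The idea behind these steps can be imitated in a few lines of Python. The sketch below is a toy stand-in for a real lexer generator, not the interface of any existing tool: `make_lexer` is a hypothetical function that takes a specification (a list of token names and regular expressions) and returns a lexer function.

```python
import re
from typing import Callable, List, Tuple

Spec = List[Tuple[str, str]]                   # (token name, regular expression)
Lexer = Callable[[str], List[Tuple[str, str]]]


def make_lexer(spec: Spec, skip: str = "SKIP") -> Lexer:
    """Build a lexer function from a specification (toy stand-in for a generator).

    Assumes no pattern in `spec` can match the empty string.
    """
    master = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in spec))

    def lex(source: str) -> List[Tuple[str, str]]:
        tokens, pos = [], 0
        while pos < len(source):
            match = master.match(source, pos)
            if match is None:
                raise ValueError(f"no rule matches at offset {pos}")
            if match.lastgroup != skip:
                tokens.append((match.lastgroup, match.group()))
            pos = match.end()
        return tokens

    return lex


# Two specifications, one generator: only the rules differ per language.
lex_arith = make_lexer([("NUM", r"\d+"), ("OP", r"[+*]"), ("SKIP", r"\s+")])
lex_assign = make_lexer([("NAME", r"[a-z]+"), ("EQ", r"="), ("NUM", r"\d+"), ("SKIP", r"\s+")])
```

Supporting another language then means writing a new specification rather than a new scanning loop, which is the main appeal of the generator approach.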

Additional tips for developing a lexical analyzer

- Use a lexer generator to simplify the development process.

- Write a lexer specification that is complete and unambiguous.

- Test the lexical analyzer with a variety of source code to ensure that it can correctly identify the tokens.