What are the different tasks of lexical analysis?

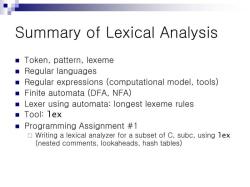

Lexical analysis, also known as lexing, is the first phase of compiling a program and involves breaking the source code into tokens. Tokens are the smallest units of meaning in a programming language. Various tasks are performed during lexical analysis to process the source code efficiently. Here are some key tasks of lexical analysis:

Tokenization:

- The primary task of lexical analysis is to break the source code into tokens. Tokens are the fundamental building blocks of a program and include keywords, identifiers, literals, operators, and punctuation symbols.

Removing Whitespace and Comments:

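- Whitespace characters (such as spaces, tabs, and line breaks) and comments usually have no effect on what a program does, although whitespace still separates adjacent tokens and is significant in indentation-sensitive languages such as Python. Lexical analysis discards these elements once they have served that purpose, which simplifies the subsequent stages of the compilation process.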
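As a rough sketch of how a lexer might skip this material between tokens, the function below advances past whitespace and comments. The comment syntax (`//` line comments and `/* ... */` block comments) and the names `TRIVIA` and `skip_trivia` are assumptions made for illustration, not part of any particular language definition.

```python
import re

# Assumed "trivia": whitespace, // line comments, and /* block comments */.
TRIVIA = re.compile(r"(?:\s+|//[^\n]*|/\*.*?\*/)+", re.DOTALL)

def skip_trivia(source, pos):
    """Return the index of the first character at or after pos that is not trivia."""
    match = TRIVIA.match(source, pos)
    return match.end() if match else pos

source = "  // a note\n/* block */ x = 1;"
start = skip_trivia(source, 0)
print(source[start:])  # the remaining text begins at the first real token
```

Skipping from a known position, rather than deleting comments from the whole file with one substitution, avoids touching comment-like text inside string literals.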

Identifying Keywords:

- Keywords are reserved words in a programming language that have a specific meaning. Lexical analysis identifies and categorizes keywords, which are used for control structures, variable declarations, and other language-specific features.
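One common approach, sketched here under the assumption of a small C-like keyword set, is to scan every word the same way and then check it against a table of reserved words. The names `KEYWORDS` and `classify_word` are hypothetical.

```python
# Hypothetical keyword set for a small C-like language.
KEYWORDS = frozenset({"int", "float", "if", "else", "while", "return"})

def classify_word(lexeme):
    """Label an already-scanned word as KEYWORD or IDENTIFIER."""
    return "KEYWORD" if lexeme in KEYWORDS else "IDENTIFIER"

for word in ("while", "whilex", "int", "total"):
    print(word, classify_word(word))
```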

Processing Identifiers:

- Identifiers are user-defined names for variables, functions, classes, etc. Lexical analysis recognizes identifiers and checks that they follow the language's lexical rules for names, for example which characters may start or appear in an identifier.

Handling Literals:

- Literals represent constant values in a program, such as numeric constants, string literals, or boolean values. Lexical analysis identifies and processes these literals, converting them into appropriate internal representations.
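The conversion step can be illustrated with a minimal sketch. It assumes a simplified literal syntax (`true`/`false`, double-quoted strings without escape processing, decimal integers, and numbers containing a dot treated as floats); the function name `literal_value` is hypothetical.

```python
def literal_value(lexeme):
    """Convert a literal lexeme to a Python value under the assumed syntax."""
    if lexeme in ("true", "false"):
        return lexeme == "true"
    if len(lexeme) >= 2 and lexeme.startswith('"') and lexeme.endswith('"'):
        return lexeme[1:-1]  # escape sequences are not processed in this sketch
    if "." in lexeme:
        return float(lexeme)
    return int(lexeme)

for text in ("10", "3.5", '"hi"', "true"):
    print(repr(text), "->", repr(literal_value(text)))
```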

Recognizing Operators:

- Operators are symbols that perform operations on variables and values. Lexical analysis identifies and classifies operators, distinguishing between arithmetic, logical, and relational operators.
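Operators raise a small practical question: `<=` should be read as one operator, not as `<` followed by `=`. A common answer is to try the longest candidate first. The sketch below assumes a hypothetical operator table (the `ASSIGNMENT` category is added for illustration) and a hypothetical helper `match_operator`.

```python
# Hypothetical operator table: lexeme -> category.
OPERATORS = {
    "+": "ARITHMETIC", "-": "ARITHMETIC", "*": "ARITHMETIC", "/": "ARITHMETIC",
    "<": "RELATIONAL", ">": "RELATIONAL", "<=": "RELATIONAL", ">=": "RELATIONAL",
    "==": "RELATIONAL", "!=": "RELATIONAL",
    "&&": "LOGICAL", "||": "LOGICAL", "!": "LOGICAL",
    "=": "ASSIGNMENT",
}
MAX_LEN = max(len(op) for op in OPERATORS)

def match_operator(source, pos):
    """Return (lexeme, category) for the longest operator starting at pos, or None."""
    for length in range(MAX_LEN, 0, -1):  # longest candidates first
        candidate = source[pos:pos + length]
        if candidate in OPERATORS:
            return candidate, OPERATORS[candidate]
    return None

print(match_operator("a<=b", 1))
print(match_operator("a<b", 1))
```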

Dealing with Punctuation Symbols:

- Punctuation symbols, such as parentheses, commas, semicolons, and braces, play a crucial role in defining the structure of the code. Lexical analysis recognizes and processes these symbols.

Error Handling:

- Lexical analysis includes error handling mechanisms to detect and report lexical errors. This involves recognizing invalid tokens or patterns in the source code.

Generating Symbol Table:

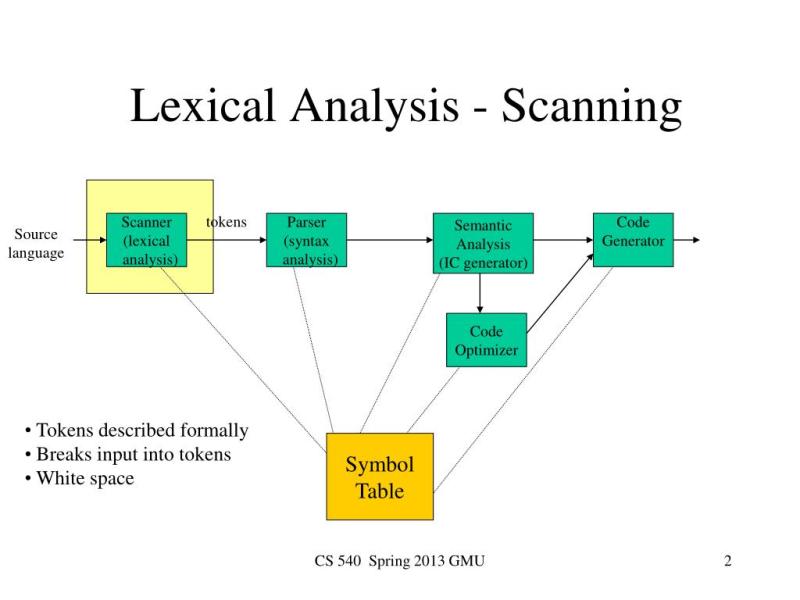

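- A symbol table is a data structure that keeps track of information about identifiers, such as their names, types, and memory locations. Lexical analysis contributes to the symbol table mainly by supplying the identifier names; in some compilers the lexer enters each name when it is first seen, while details such as types and memory locations are filled in by later phases.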
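A minimal sketch of that contribution, assuming tokens arrive as `(kind, lexeme)` pairs and that the lexer is the component that records names (many compilers leave this to the parser or semantic analysis instead). The entry layout and the name `build_symbol_table` are hypothetical.

```python
def build_symbol_table(tokens):
    """Map each identifier lexeme to an entry; other token kinds are not entered."""
    table = {}
    for index, (kind, lexeme) in enumerate(tokens):
        if kind == "IDENTIFIER" and lexeme not in table:
            # The type is unknown at this stage; a later phase would fill it in.
            table[lexeme] = {"first_token_index": index, "type": None}
    return table

# Hand-written token stream for: int a = 10; a
tokens = [
    ("KEYWORD", "int"), ("IDENTIFIER", "a"), ("OPERATOR", "="),
    ("NUMBER", "10"), ("PUNCT", ";"), ("IDENTIFIER", "a"),
]
print(build_symbol_table(tokens))
```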

Line and Column Number Tracking:

- Lexical analysis often involves keeping track of line and column numbers in the source code. This information is useful for generating meaningful error messages and aiding in debugging.
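One simple way to obtain this information, shown here as a sketch, is to derive the line and column from a character offset by counting newlines. The function name `line_and_column` and the 1-based numbering are assumptions; a production lexer would more likely update counters incrementally as it scans.

```python
def line_and_column(source, offset):
    """Return the 1-based (line, column) of a character offset in source."""
    line = source.count("\n", 0, offset) + 1
    last_newline = source.rfind("\n", 0, offset)  # -1 when offset is on line 1
    column = offset - last_newline
    return line, column

source = "int a;\nint b = $;"
offset = source.index("$")
line, column = line_and_column(source, offset)
print(f"unrecognized character at line {line}, column {column}")
```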

Preprocessing Directives (in some languages):

- In languages with preprocessing capabilities, such as C and C++, directives like #include and #define modify the source code before the main compilation. Because the preprocessor itself operates on tokens, handling these directives is closely tied to lexical analysis.

Overall, lexical analysis is a critical step in the compilation process, providing a structured and tokenized representation of the source code for further processing by subsequent phases of the compiler.

What are the different tasks involved in lexical analysis?

Lexical analysis is the first phase of a compiler or interpreter. It breaks down a stream of characters into a sequence of tokens, which are the basic building blocks of a program. The following are some of the tasks involved in lexical analysis:

- Tokenization: This involves identifying and grouping characters into tokens. For example, the string "int a = 10;" could be tokenized as follows:

"int", "a", "=", "10", ";"

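- Symbol table management: The symbol table is a data structure that stores information about the identifiers in a program. For example, the symbol table for the above statement would contain an entry for its only identifier:

a

The keyword "int" and the literal "10" are tokens as well, but they are not identifiers and would not normally receive symbol table entries.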

- Error detection and reporting: Lexical analysis can also be used to detect and report errors in a program. For example, if the compiler encounters an unrecognized character, it can report a lexical error.
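The tokenization and error-reporting tasks above can be sketched together with Python's `re` module. The token classes, the keyword list, and the names `TOKEN_SPEC`, `MASTER`, and `tokenize` are assumptions for a small C-like language, not a definition of any real one.

```python
import re

# Hypothetical token classes; earlier alternatives win when two patterns
# could match at the same position, so KEYWORD is listed before IDENTIFIER.
TOKEN_SPEC = [
    ("NUMBER",     r"\d+"),
    ("KEYWORD",    r"\b(?:int|float|if|else|while|return)\b"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("OPERATOR",   r"[=+\-*/]"),
    ("PUNCT",      r"[;,(){}]"),
    ("SKIP",       r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))

def tokenize(source):
    """Return a list of (kind, lexeme) pairs, dropping whitespace."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            # No token class matches here: report a lexical error.
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens

print(tokenize("int a = 10;"))
```

Calling `tokenize("int a = 10 @;")` raises `SyntaxError`, because `@` belongs to none of the assumed token classes.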

How is lexical analysis applied in programming languages and natural language processing?

Lexical analysis is an essential part of any programming language compiler or interpreter. It is also used in natural language processing (NLP) applications, such as machine translation and speech recognition.

In NLP applications, lexical analysis is used to identify and classify words in a text. For example, a lexical analyzer for a machine translation system might identify the following tokens in the English sentence "I love cats":

"I", "love", "cats"

The lexical analyzer could then classify these tokens as follows:

"I" - pronoun

"love" - verb

"cats" - noun

This information could then be used by the machine translation system to translate the sentence into another language.
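The "I love cats" example can be reproduced with a toy sketch. The three-word lexicon and the function name `tag_words` are hypothetical; a real NLP system would typically rely on a trained tagger rather than a lookup table.

```python
# Tiny hand-written lexicon, for illustration only.
LEXICON = {"i": "pronoun", "love": "verb", "cats": "noun"}

def tag_words(sentence):
    """Split on whitespace and look each word up in the lexicon."""
    return [(word, LEXICON.get(word.lower(), "unknown")) for word in sentence.split()]

for word, category in tag_words("I love cats"):
    print(f'"{word}" - {category}')
```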

Are there specific challenges or considerations associated with lexical analysis tasks?

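One of the challenges of lexical analysis is dealing with ambiguity. For example, the digits "123" name different values depending on whether they are read as a decimal, hexadecimal, or octal number. Many languages settle this inside the token itself by using a prefix: in C, "123" is decimal, "0x123" is hexadecimal, and "0123" is octal. Other cases are ambiguous at the character level, such as "<=", which could be one operator or "<" followed by "="; lexers usually resolve these by taking the longest possible match. Where such rules are not enough, the lexical analyzer must use additional information, such as the surrounding context, to determine the correct interpretation.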
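For numeric literals, one widely used way to settle the base is a prefix convention. The sketch below assumes C-style prefixes (`0x` for hexadecimal, a leading `0` for octal, otherwise decimal); the function name `parse_int_literal` is hypothetical.

```python
def parse_int_literal(lexeme):
    """Interpret an integer literal using assumed C-style base prefixes."""
    lower = lexeme.lower()
    if lower.startswith("0x"):
        return int(lower[2:], 16)   # hexadecimal
    if len(lower) > 1 and lower.startswith("0"):
        return int(lower[1:], 8)    # octal
    return int(lower, 10)           # decimal

for text in ("123", "0x123", "0123"):
    print(text, "->", parse_int_literal(text))
```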

Another challenge of lexical analysis is dealing with errors. If the compiler encounters an unrecognized character, it must decide whether to ignore the error or report it to the user. In some cases, the compiler may be able to recover from the error and continue compiling the program. However, in other cases, the error may be fatal and the compiler may have to terminate.
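A simple recovery strategy is to record the error, skip the offending character, and keep scanning so that several errors can be reported in one run. The sketch below assumes a hypothetical symbol alphabet `ALLOWED_SYMBOLS` and a hypothetical function `scan_with_recovery`; it is one possible policy, not the only one.

```python
# Hypothetical set of symbols the language accepts outside words and numbers.
ALLOWED_SYMBOLS = set("=+-*/<>!;,(){}")

def scan_with_recovery(source):
    """Drop unrecognized characters and collect an error message for each."""
    errors = []
    kept = []
    for offset, ch in enumerate(source):
        if ch.isalnum() or ch.isspace() or ch == "_" or ch in ALLOWED_SYMBOLS:
            kept.append(ch)
        else:
            errors.append(f"offset {offset}: unrecognized character {ch!r}")
    return "".join(kept), errors

cleaned, errors = scan_with_recovery("int a = 1 @ 2 # 3;")
print(cleaned)
for message in errors:
    print(message)
```

A compiler that treats lexical errors as fatal would instead stop at the first entry in `errors`.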

Here are some additional considerations associated with lexical analysis tasks:

- Efficiency: The lexical analyzer is the only phase that examines every character of the source code, so it can account for a noticeable share of compile time. Therefore, it is important to design efficient lexical analyzers.

- Portability: Lexical analyzers should be portable, meaning that they should be able to run on different platforms. This can be challenging, as different platforms have different character encodings and other conventions.

- Maintainability: Lexical analyzers should be maintainable, meaning that they should be easy to update and fix bugs in. This can be challenging, as lexical analyzers can be complex and difficult to understand.

Overall, lexical analysis is an important and challenging task in compiler design and natural language processing.