What Is a Lexical Analyzer?

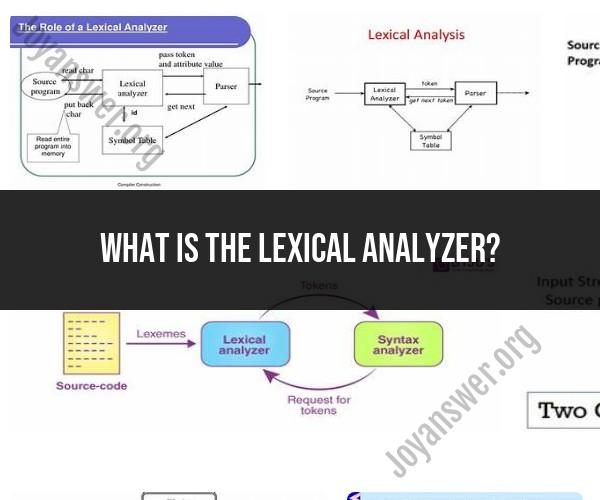

A lexical analyzer, often referred to as a lexer or tokenizer, is an essential component of a compiler or interpreter. Its primary function is to analyze the source code of a program and break it down into a sequence of smaller units called tokens. These tokens represent the fundamental building blocks of a programming language and serve as input for subsequent phases of the compilation or interpretation process. Here are key concepts related to the lexical analyzer:

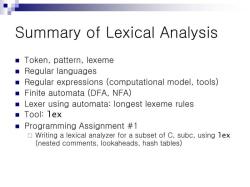

Tokenization: The process of dividing the source code into tokens is known as tokenization. Tokens are typically categorized into different types, such as keywords, identifiers, literals (e.g., numbers and strings), operators, and punctuation.

Regular Expressions: Lexical analyzers use regular expressions to define the patterns that represent different types of tokens. Each regular expression corresponds to a specific token type (e.g., a regular expression for identifying numbers).
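As a rough illustration of pattern-driven tokenization, the sketch below combines one regular expression per token type into a single master pattern. The token names, the toy keyword set, and the `lex` function are assumptions made up for this example, not part of any particular compiler.

```python
import re

# Hypothetical token types for a toy language. Order matters: when two
# patterns match at the same position, the earlier alternative wins,
# so KEYWORD is listed before IDENTIFIER.
TOKEN_SPEC = [
    ("NUMBER",     r"\d+(?:\.\d+)?"),
    ("KEYWORD",    r"\b(?:if|else|while|return)\b"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("OPERATOR",   r"==|!=|<=|>=|[+\-*/=<>]"),
    ("PUNCT",      r"[(){};,]"),
    ("SKIP",       r"\s+"),
]

# One named group per token type, joined into a single alternation.
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def lex(source):
    """Yield (token_type, text) pairs, dropping whitespace."""
    for match in MASTER.finditer(source):
        if match.lastgroup != "SKIP":
            yield match.lastgroup, match.group()


for token in lex("if x1 >= 10 return x1;"):
    print(token)
```

Note that `finditer` silently skips characters that match no pattern; a real lexer would report them as errors instead.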

Lexical Rules: Lexical analyzers follow a set of lexical rules that define how characters in the source code are combined to form tokens. For example, a common rule might specify that an identifier can consist of letters, digits, and underscores but cannot start with a digit.
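The identifier rule described above can be written down directly as a pattern. This is a minimal sketch that assumes ASCII-only identifiers; the `is_identifier` helper is a hypothetical name used only for illustration.

```python
import re

# Assumed rule: letters, digits, and underscores, where the first
# character must be a letter or an underscore (never a digit).
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def is_identifier(text):
    """Return True if the whole string is a valid identifier under the rule."""
    return IDENTIFIER.fullmatch(text) is not None


for candidate in ["total_2", "_tmp", "2nd", "a-b"]:
    print(candidate, is_identifier(candidate))
```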

Token Attributes: Tokens generated by the lexical analyzer often include attributes that provide additional information about the token. For example, an identifier token may include the actual name of the identifier as its attribute.
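One possible shape for a token with attributes is sketched below. The field names (`kind`, `lexeme`, `line`, `column`, `value`) and the two helper functions are assumptions chosen for this example; real compilers lay out their tokens in many different ways.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Token:
    kind: str      # token type, e.g. "IDENTIFIER" or "NUMBER"
    lexeme: str    # exact text matched in the source
    line: int      # 1-based line number, useful for diagnostics
    column: int    # 1-based column number
    value: Optional[Union[int, float, str]] = None  # the token's attribute


def make_number(lexeme, line, column):
    """Attach the numeric value as the attribute of a NUMBER token."""
    value = float(lexeme) if "." in lexeme else int(lexeme)
    return Token("NUMBER", lexeme, line, column, value)


def make_identifier(lexeme, line, column):
    """Attach the identifier's name as the attribute of an IDENTIFIER token."""
    return Token("IDENTIFIER", lexeme, line, column, lexeme)


print(make_identifier("count", 2, 1))
print(make_number("42", 2, 9))
```

Keeping the position alongside the attribute lets later phases point error messages at the right place in the source.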

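Error Handling: Lexical analyzers are responsible for detecting and reporting lexical errors, such as illegal characters, unterminated string literals, or malformed numeric literals. Syntax errors, by contrast, are detected later by the parser. Good error handling reports the line and column of the offending text so that programmers can locate the problem quickly.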
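Reporting an illegal character with its position might look like the following sketch. The `LexError` class, the tiny token set, and the message format are all assumptions for illustration only.

```python
import re


class LexError(Exception):
    """Raised when no token pattern matches at the current position."""


# A deliberately small, hypothetical token set.
TOKEN = re.compile(
    r"(?P<NUMBER>\d+)"
    r"|(?P<IDENTIFIER>[A-Za-z_]\w*)"
    r"|(?P<OPERATOR>[+\-*/=])"
    r"|(?P<SKIP>[ \t\n]+)"
)


def lex(source):
    """Return a list of (token_type, text) pairs or raise LexError."""
    tokens, pos = [], 0
    while pos < len(source):
        match = TOKEN.match(source, pos)
        if match is None:
            # Derive a 1-based line and column from the character offset.
            line = source.count("\n", 0, pos) + 1
            column = pos - (source.rfind("\n", 0, pos) + 1) + 1
            raise LexError(
                f"line {line}, column {column}: illegal character {source[pos]!r}"
            )
        if match.lastgroup != "SKIP":
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


try:
    lex("a = 1\nb = a $ 2")
except LexError as error:
    print(error)
```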

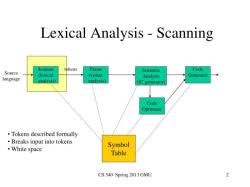

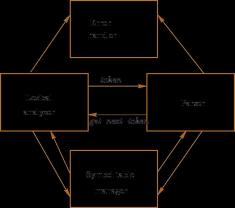

Symbol Table: In some compilers or interpreters, the lexical analyzer enters each identifier it encounters into a symbol table. Subsequent phases add attributes such as type and scope to these entries and use the table for name resolution and type checking.
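A symbol table can be as simple as a dictionary keyed by identifier name. The sketch below is one hypothetical layout; the `SymbolTable` class, its `intern` and `lookup` methods, and the record fields are assumptions for this example.

```python
class SymbolTable:
    """Maps identifier names to attribute records (a hypothetical layout)."""

    def __init__(self):
        self._entries = {}

    def intern(self, name, line):
        """Return the entry for name, creating it the first time it is seen."""
        if name not in self._entries:
            self._entries[name] = {"name": name, "first_line": line, "type": None}
        return self._entries[name]

    def lookup(self, name):
        """Return the entry for name, or None if it was never seen."""
        return self._entries.get(name)


# The lexer would call intern() for every identifier token it produces.
table = SymbolTable()
for name, line in [("total", 1), ("count", 1), ("total", 3)]:
    table.intern(name, line)

# A later phase, such as type checking, fills in further attributes.
table.lookup("total")["type"] = "int"
print(table.lookup("total"))
```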

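Efficiency: Efficiency is crucial in lexical analysis because the lexer examines every character of the source code, which can be quite large. Lexical analyzers are therefore often implemented as finite automata: the token patterns are compiled into a deterministic finite automaton (DFA) that recognizes tokens in a single left-to-right pass, typically doing a constant amount of work per character.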
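To make the finite-automaton idea concrete, here is a small hand-written, table-driven DFA that recognizes identifiers and integers. The state names, the transition table, and `longest_match` are assumptions for this sketch; a lexer generator would normally produce a much larger table automatically.

```python
def char_class(ch):
    """Map a character to the input class used by the transition table."""
    if ch.isascii() and (ch.isalpha() or ch == "_"):
        return "letter"
    if ch.isascii() and ch.isdigit():
        return "digit"
    return "other"


# States: "start", "ident", "int". A missing entry means "no transition".
TRANSITIONS = {
    ("start", "letter"): "ident",
    ("start", "digit"): "int",
    ("ident", "letter"): "ident",
    ("ident", "digit"): "ident",
    ("int", "digit"): "int",
}
ACCEPTING = {"ident": "IDENTIFIER", "int": "INTEGER"}


def longest_match(source, pos=0):
    """Return (token_type, lexeme) for the longest token at pos, or None."""
    state, end, kind = "start", pos, None
    i = pos
    while i < len(source):
        next_state = TRANSITIONS.get((state, char_class(source[i])))
        if next_state is None:
            break  # no transition: the automaton stops here
        state = next_state
        i += 1
        if state in ACCEPTING:
            end, kind = i, ACCEPTING[state]  # remember the last accepting point
    return (kind, source[pos:end]) if kind else None


print(longest_match("count1+2"))
print(longest_match("x = 42", 4))
```

Each character is looked at once and costs a single dictionary lookup, which is where the speed of automaton-based lexers comes from.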

Integration with Parsing: The output of the lexical analyzer is typically fed into the next phase of the compiler or interpreter, which is the parser. The parser analyzes the syntactic structure of the program based on the tokens provided by the lexer.
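The hand-off from lexer to parser can be sketched with a generator that the parser pulls tokens from on demand. The toy grammar, the `Parser` class, and the tuple-based syntax tree below are assumptions for illustration, not a description of any specific compiler.

```python
import re

TOKEN = re.compile(r"(?P<NUMBER>\d+)|(?P<OP>[+*()])|(?P<SKIP>\s+)")


def lex(source):
    """Yield (token_type, text) pairs, ending with an EOF marker."""
    pos = 0
    while pos < len(source):
        match = TOKEN.match(source, pos)
        if match is None:
            raise SyntaxError(f"illegal character {source[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":
            yield match.lastgroup, match.group()
        pos = match.end()
    yield "EOF", ""


class Parser:
    """Toy grammar: expr := term ('+' term)*
                    term := factor ('*' factor)*
                    factor := NUMBER | '(' expr ')'"""

    def __init__(self, tokens):
        self.tokens = tokens
        self.current = next(tokens)  # one token of lookahead

    def advance(self):
        token = self.current
        self.current = next(self.tokens, ("EOF", ""))
        return token

    def expr(self):
        node = self.term()
        while self.current == ("OP", "+"):
            self.advance()
            node = ("+", node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.current == ("OP", "*"):
            self.advance()
            node = ("*", node, self.factor())
        return node

    def factor(self):
        kind, text = self.advance()
        if kind == "NUMBER":
            return int(text)
        if (kind, text) == ("OP", "("):
            node = self.expr()
            if self.advance() != ("OP", ")"):
                raise SyntaxError("expected ')'")
            return node
        raise SyntaxError(f"unexpected token {text!r}")


def parse(source):
    parser = Parser(lex(source))
    tree = parser.expr()
    if parser.current[0] != "EOF":
        raise SyntaxError(f"unexpected token {parser.current[1]!r}")
    return tree


print(parse("1 + 2 * (3 + 4)"))
```

The parser never sees raw characters or whitespace; it only inspects token types and text, which is the separation of concerns the paragraph above describes.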

Reusable Components: A lexer is specific to the lexical rules of one language, but the machinery behind it is often reusable. Lexer generators such as Lex and Flex build a lexical analyzer from a specification of token patterns, so the same tool can serve many languages or dialects by changing only the specification.

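Code Generation: The lexical analyzer does not generate code itself, but its output is the starting point for every phase that does. In interpreted or scripting languages, where the source code is executed without a separate compilation step, the interpreter still tokenizes the source first, and some simple interpreters store and execute programs in tokenized form.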

In summary, a lexical analyzer is a critical component of the compilation or interpretation process that breaks down source code into tokens, enforces lexical rules, detects errors, and provides the foundation for subsequent phases of processing, such as parsing and code generation. It plays a fundamental role in translating human-readable source code into a format that a computer can understand and execute.

Exploring the Role and Function of a Lexical Analyzer

A lexical analyzer, also known as a lexer, is a component of a compiler that reads the source code and groups the characters into tokens. Tokens are the basic building blocks of a program, such as keywords, identifiers, operators, and punctuation symbols.

The lexical analyzer works by scanning the source code character by character and matching the characters against patterns, usually written as regular expressions, that describe each token type. When more than one pattern could match, lexers conventionally take the longest match.

Once the lexical analyzer has identified a token, it passes the token to the parser. The parser is another component of the compiler that is responsible for analyzing the syntax of the program.

Understanding Lexical Analysis in Programming and Compiler Design

Lexical analysis is the first phase of compilation. It is responsible for breaking down the source code into smaller, more manageable pieces. This makes it easier for the parser to analyze the syntax of the program.

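Lexical analysis is also used to identify errors in the source code. For example, if the lexical analyzer encounters an invalid character or an unterminated string literal, it will generate an error. A misspelled keyword, on the other hand, is usually tokenized as an ordinary identifier and is only rejected later by the parser.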

Lexical analysis is an essential part of compiler design. It is responsible for converting the source code into a form that can be understood by the parser.

The Significance of Lexical Analyzers in Software Development

Lexical analyzers are significant in software development because they play a vital role in the compilation process. In most compilers, the parser works on tokens rather than raw characters, so the rest of the pipeline, up to and including machine code generation, depends on the lexer's output.

Lexical analyzers are also used to develop other tools and utilities, such as text editors and code analyzers. Text editors use lexical analyzers to highlight syntax and to flag lexical errors in code. Code analyzers use lexical analyzers as the first step in identifying potential security vulnerabilities and performing other types of code analysis.
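As a small illustration of lexer-based tooling, the sketch below uses Python's standard `tokenize` and `keyword` modules to label each token with a category that an editor could map to a color. The `classify` function and the category names are assumptions for this example.

```python
import io
import keyword
import tokenize


def classify(source):
    """Return (category, text, (row, col)) triples for Python source code."""
    spans = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME:
            # Python's tokenizer reports keywords as NAME tokens.
            category = "keyword" if keyword.iskeyword(tok.string) else "name"
        elif tok.type == tokenize.NUMBER:
            category = "number"
        elif tok.type == tokenize.STRING:
            category = "string"
        elif tok.type == tokenize.COMMENT:
            category = "comment"
        elif tok.type == tokenize.OP:
            category = "operator"
        else:
            continue  # skip NEWLINE, INDENT, DEDENT, ENDMARKER, and so on
        spans.append((category, tok.string, tok.start))
    return spans


for span in classify("if x > 1:  # check\n    y = 'a'\n"):
    print(span)
```

An editor would use the reported positions to decide which characters on screen receive which style.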

Overall, lexical analyzers are essential tools for software development. They play a vital role in the compilation process and are used to develop other tools and utilities.

Here are some additional benefits of using lexical analyzers in software development:

- Improved code quality: Lexical analyzers catch lexical errors, such as illegal characters and unterminated strings, as soon as the source code is read. This stops malformed input from reaching later phases and can help to reduce the number of bugs in software applications.

- Increased productivity: Lexer generators automate the work of building a lexical analyzer from a list of token patterns. This frees up developers to focus on other tasks, such as designing the language's grammar and writing and testing code.

- Improved code readability: Editors built on lexical analyzers highlight keywords, strings, and comments and flag lexical errors as you type. This can make it easier for developers to understand and maintain code.

If you are a software developer, I encourage you to learn more about lexical analyzers and how they can be used to improve your software development process.