What is the primary task of a lexical analyzer?

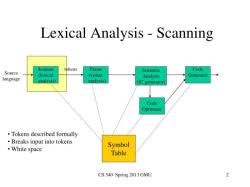

The primary task of a lexical analyzer (also called a lexer or scanner) is to read the source code of a program and break it into a sequence of tokens. Tokens are the smallest meaningful units of a programming language, such as keywords, identifiers, operators, and constants. The main responsibilities of a lexical analyzer include:

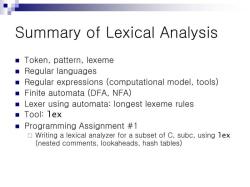

Tokenization: The lexer scans the source code character by character, grouping sequences of characters into meaningful tokens and categorizing each one. These tokens are the building blocks that represent the fundamental elements of the programming language.

Removing Whitespace and Comments: The lexical analyzer typically discards irrelevant characters such as whitespace (spaces, tabs, and line breaks) and comments (both single-line and multi-line), because in most languages they do not affect the structure of the program. Python, where indentation is significant, is a notable exception.

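Error Detection: Lexical analyzers detect and report lexical errors, such as illegal characters, unterminated string literals, or malformed numeric literals, early in the compilation process. A misspelled keyword, by contrast, is usually scanned as an ordinary identifier, and the resulting problem is caught later by the parser or the semantic analyzer.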

Generating a Token Stream: After identifying and categorizing tokens, the lexer generates a token stream, which is a sequence of tokens in the order they appear in the source code. This token stream is then passed on to the next stage of the compiler, the parser, which constructs the program's abstract syntax tree (AST).

Supporting Preprocessing Directives: In some programming languages, the lexical level also handles preprocessing directives, such as #include and conditional compilation directives (#if, #ifdef) in C and C++. These directives are processed before the tokens reach the parser; in C, for example, the preprocessor operates on preprocessing tokens, which are converted into ordinary tokens only afterward.
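The responsibilities above can be sketched as a small regex-based lexer in Python. This is a minimal illustration under stated assumptions, not a production design: the token categories, the keyword set, and the names scan, Token, and LexError are hypothetical, chosen for a tiny C-like language. It scans the input left to right, discards whitespace and comments, reports any unexpected character as a lexical error, and yields the remaining tokens in source order as a token stream. Preprocessing is not modeled.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str  # token category, e.g. "NUMBER" or "IDENT"
    text: str  # the exact characters matched (the lexeme)


class LexError(Exception):
    """Raised for characters that cannot start any token."""


# Hypothetical token set for a tiny C-like language. Alternatives are tried
# in order, so comments must come before "/" and "==" before "=".
TOKEN_SPEC = [
    ("COMMENT", r"//[^\n]*|/\*.*?\*/"),  # single-line and multi-line comments
    ("WS", r"\s+"),                      # spaces, tabs, line breaks
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"==|[+\-*/=<>]"),
    ("PUNCT", r"[(){};,]"),
    ("ERROR", r"."),                     # any other single character
]
MASTER = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC),
    re.DOTALL,  # lets /* ... */ span several lines
)
KEYWORDS = {"if", "else", "while", "return", "int"}  # hypothetical keyword set


def scan(source: str) -> Iterator[Token]:
    """Yield the token stream for `source`, skipping whitespace and comments."""
    for m in MASTER.finditer(source):
        kind, text = m.lastgroup, m.group()
        if kind in ("WS", "COMMENT"):
            continue  # discarded: no effect on program structure here
        if kind == "ERROR":
            raise LexError(f"unexpected character {text!r} at offset {m.start()}")
        if kind == "IDENT" and text in KEYWORDS:
            kind = "KEYWORD"  # keywords look like identifiers but are reserved
        yield Token(kind, text)
```

For example, scanning "int x = 42; // answer" yields KEYWORD int, IDENT x, OP =, NUMBER 42, and PUNCT ;, with the comment gone. Because the alternatives are tried in order, the comment pattern has to precede the operator pattern; otherwise "//" would be read as two division operators.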

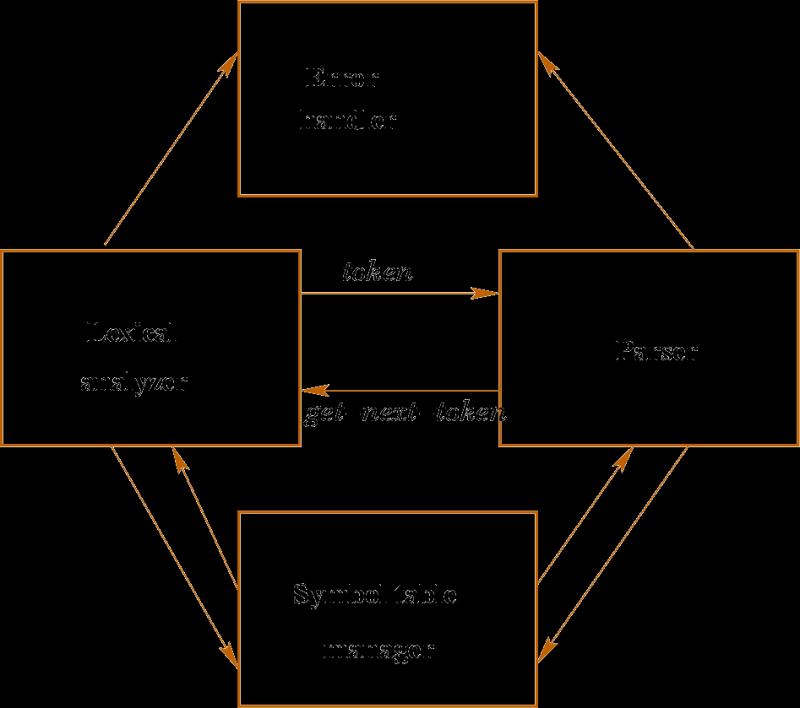

The output of the lexical analyzer, the token stream, serves as input to the subsequent phases of the compiler, including the parser, which checks the syntax of the program, and the semantic analyzer, which verifies the program's semantics and enforces language rules. The division of labor between the lexical analyzer and the parser allows for a more modular and efficient approach to compiling source code.
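To illustrate this division of labor, here is a minimal recursive-descent parser sketch in Python that consumes (kind, text) token pairs of the sort a lexer emits. The grammar, the token kinds, and the names Parser and ParseError are hypothetical. The point is that the parser never deals with characters, whitespace, or comments, and that it, not the lexer, rejects an ill-formed sequence of individually valid tokens such as "a b".

```python
from typing import List, Tuple, Union

Token = Tuple[str, str]        # (kind, text), as a lexer might emit them
Node = Union[str, int, tuple]  # tiny AST: leaves are names/ints, inner nodes are tuples


class ParseError(Exception):
    pass


class Parser:
    """Recursive-descent parser for the hypothetical grammar
         expr := term (("+" | "-") term)*
         term := NUMBER | IDENT | "(" expr ")"
    It sees only tokens, never raw characters, whitespace, or comments."""

    def __init__(self, tokens: List[Token]) -> None:
        self.tokens = tokens
        self.pos = 0

    def peek(self) -> Token:
        # Past the end of the stream, report a synthetic end-of-file token.
        return self.tokens[self.pos] if self.pos < len(self.tokens) else ("EOF", "")

    def advance(self) -> Token:
        tok = self.peek()
        self.pos += 1
        return tok

    def parse(self) -> Node:
        node = self.expr()
        if self.peek()[0] != "EOF":
            raise ParseError(f"unexpected token {self.peek()!r}")
        return node

    def expr(self) -> Node:
        node = self.term()
        while self.peek() in (("OP", "+"), ("OP", "-")):
            op = self.advance()[1]
            node = (op, node, self.term())  # builds a left-associative tree
        return node

    def term(self) -> Node:
        kind, text = self.advance()
        if kind == "NUMBER":
            return int(text)
        if kind == "IDENT":
            return text
        if (kind, text) == ("PUNCT", "("):
            node = self.expr()
            if self.advance() != ("PUNCT", ")"):
                raise ParseError("expected ')'")
            return node
        raise ParseError(f"unexpected token {(kind, text)!r}")
```

Given the tokens for "a + 2 - (b + 1)", parse() returns ("-", ("+", "a", 2), ("+", "b", 1)). Given [("IDENT", "a"), ("IDENT", "b")], every token is lexically valid, yet the parser raises ParseError, which is the kind of error the lexer is not responsible for.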

The Primary Task of a Lexical Analyzer: Function and Purpose

A lexical analyzer, also known as a scanner or tokenizer, is a crucial component of a compiler. It plays a fundamental role in the compilation process by transforming the input source code into a stream of meaningful tokens. These tokens are the basic building blocks of the program, representing individual words, symbols, and operators that the compiler can understand and process.

The primary task of a lexical analyzer is to break down the input source code into a sequence of tokens. This process involves:

Tokenization: Identifying and separating individual tokens from the input stream.

Classification: Recognizing each token's category, such as keyword, identifier, literal, operator, or punctuation. Comments are also recognized, but they are usually discarded rather than passed on.

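Attribute Gathering: Attaching relevant information to each token, such as its lexeme (the exact source text), the value of a literal, or its source position (line and column) for use in error messages.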
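As a sketch of classification and attribute gathering, the Python scanner below records, for each token, its category, its lexeme, a converted value (the integer for a number, the name for an identifier), and its line and column. The token set, keyword list, Token fields, and LexError class are assumptions for illustration; real lexers differ in which attributes they keep.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Union


@dataclass(frozen=True)
class Token:
    kind: str                         # category: KEYWORD, IDENT, NUMBER, OP
    lexeme: str                       # exact source text
    value: Optional[Union[int, str]]  # converted literal value or identifier name
    line: int                         # 1-based line number
    col: int                          # 1-based column number


class LexError(Exception):
    pass


KEYWORDS = {"if", "while", "return"}  # hypothetical keyword set
PATTERN = re.compile(
    r"(?P<NUMBER>\d+)"
    r"|(?P<IDENT>[A-Za-z_]\w*)"
    r"|(?P<OP>[-+*/=<>;(){}])"
    r"|(?P<NEWLINE>\n)"
    r"|(?P<SKIP>[ \t\r]+)"
    r"|(?P<ERROR>.)"                  # any other character is a lexical error
)


def scan(source: str) -> List[Token]:
    tokens: List[Token] = []
    line, line_start = 1, 0           # line_start: offset of the current line's first character
    for m in PATTERN.finditer(source):
        kind, text = m.lastgroup, m.group()
        col = m.start() - line_start + 1
        if kind == "NEWLINE":
            line, line_start = line + 1, m.end()
            continue
        if kind == "SKIP":
            continue
        if kind == "ERROR":
            raise LexError(f"{line}:{col}: unexpected character {text!r}")
        value: Optional[Union[int, str]] = None
        if kind == "NUMBER":
            value = int(text)         # literal value computed once, in the lexer
        elif kind == "IDENT":
            if text in KEYWORDS:
                kind = "KEYWORD"      # reclassify reserved words
            else:
                value = text          # identifier: keep its name
        tokens.append(Token(kind, text, value, line, col))
    return tokens
```

Tracking positions in the lexer is what lets a compiler later report an error such as "2:2: unexpected character '$'" instead of just "error".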

Functions and Responsibilities of Lexical Analyzers

Lexical analyzers perform several essential functions within the compilation process:

Error Detection: Identifying and reporting lexical errors in the source code, such as illegal characters, unterminated string literals, or malformed numeric literals.

Symbol Table Construction: Entering identifier names into a symbol table, where later phases record further information about each identifier, such as its type and scope.

Preprocessor Support: Handling preprocessor directives and macros, which can modify or transform the source code before further processing.

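Whitespace and Comment Removal: Discarding whitespace and comments so that later phases work with fewer, more meaningful units, which can make compilation more efficient. This is not code optimization in the usual sense, since it does not change the generated code.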

Feeding the Parser: Providing the parser with the token stream it consumes. Machine code or intermediate code is generated much later, from the parsed and analyzed program rather than directly from tokens.
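One common textbook arrangement has the lexer enter each identifier's name into the symbol table and emit an ID token whose attribute is that entry's index, so that repeated uses of a name share one entry. The Python sketch below assumes that arrangement; many compilers instead populate the symbol table during parsing or semantic analysis. The SymbolTable class, its intern method, and the entry fields are hypothetical, the type field starts empty because the lexer cannot know it, and the splitting regex is deliberately simplified and does no error checking.

```python
import re
from typing import Dict, List, Tuple


class SymbolTable:
    """Hypothetical minimal symbol table: one entry per distinct identifier name."""

    def __init__(self) -> None:
        self.index: Dict[str, int] = {}
        self.entries: List[Dict[str, object]] = []

    def intern(self, name: str) -> int:
        """Return the entry index for `name`, creating the entry on first sight."""
        if name not in self.index:
            self.index[name] = len(self.entries)
            self.entries.append({"name": name, "type": None})  # type unknown at lex time
        return self.index[name]


WORD = re.compile(r"[A-Za-z_]\w*|\d+|\S")  # simplified: identifiers, numbers, single symbols
KEYWORDS = {"int", "return"}                # hypothetical keyword set


def lex(source: str, table: SymbolTable) -> List[Tuple[str, object]]:
    tokens: List[Tuple[str, object]] = []
    for text in WORD.findall(source):
        if text in KEYWORDS:
            tokens.append(("KEYWORD", text))
        elif text[0].isalpha() or text[0] == "_":
            tokens.append(("ID", table.intern(text)))  # attribute = symbol-table index
        elif text.isdigit():
            tokens.append(("NUMBER", int(text)))
        else:
            tokens.append(("OP", text))
    return tokens
```

After lexing "int x = y + x;", both occurrences of x carry index 0. A later phase that processes the declaration could then record the type, for example with table.entries[0]["type"] = "int".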

Lexical Analysis: Its Role in Compiler Design and Programming

Lexical analysis plays a pivotal role in compiler design and programming:

Compiler Design: Lexical analysis is the first stage of the compilation process, providing the foundation for subsequent stages such as syntax analysis and semantic analysis.

Programming Language Design: The design of a programming language involves defining the lexical rules that govern how the language's tokens are formed and interpreted.

Code Readability and Maintainability: Clear, consistently applied lexical rules make source code more predictable to read, and precise lexical error messages make mistakes easier to locate and fix.

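Compiler Efficiency: Because the lexer examines every character of the source, it can account for a significant share of compilation time, so an efficient lexer (for example, one driven by a finite automaton) speeds up compilation as a whole.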

Programming Tools: Lexical analysis is the basis for various programming tools, such as code editors and linters, which can provide syntax highlighting, error checking, and code formatting.
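Tools such as syntax highlighters are built on a lexer. As an illustration, Python's standard tokenize module provides a lexical scanner for Python source code, and the sketch below uses it, together with keyword.iskeyword, to split source text into categories a highlighter might color. The category names and the classify function are made up for this example.

```python
import io
import keyword
import tokenize
from typing import List, Tuple

# Map Python token types to illustrative highlight categories.
CATEGORY = {
    tokenize.NUMBER: "number",
    tokenize.STRING: "string",
    tokenize.COMMENT: "comment",
    tokenize.OP: "operator",
}


def classify(source: str) -> List[Tuple[str, str]]:
    """Return (category, text) pairs, roughly what a syntax highlighter needs."""
    spans: List[Tuple[str, str]] = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME:
            # The tokenizer reports keywords as NAME tokens; tell them apart here.
            category = "keyword" if keyword.iskeyword(tok.string) else "name"
        elif tok.type in CATEGORY:
            category = CATEGORY[tok.type]
        else:
            continue  # NEWLINE, INDENT, DEDENT, ENDMARKER, ... carry no color
        spans.append((category, tok.string))
    return spans
```

Note that, unlike a compiler's lexer, a highlighter keeps comments, because they need to be displayed and colored.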