What is a Moral Machine?

The Moral Machine is an online platform created by researchers at the Massachusetts Institute of Technology (MIT) to explore the ethical dilemmas raised by artificial intelligence (AI), in particular by autonomous vehicles. It presents users with a series of hypothetical scenarios in which an autonomous vehicle must make a life-or-death decision.

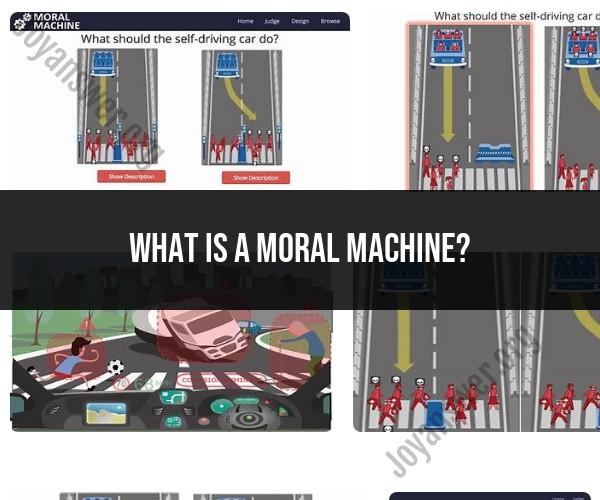

Here's how the Moral Machine typically works:

Scenario Presentation: Users are presented with a scenario where an autonomous vehicle is faced with a moral decision. For example, the vehicle may need to choose between two different courses of action that could result in different outcomes, such as saving the passengers inside the car or pedestrians outside the car.

Decision-Making: Users are then asked to make a decision by selecting one of the available options. They can choose who or what the autonomous vehicle should prioritize in the given situation.

Data Collection: The decisions made by users are collected and analyzed anonymously. The Moral Machine platform gathers data on how people from different backgrounds and cultures make moral choices in these situations.

Ethical Discussion: The platform also lets users see how their choices compare with those made by others and discuss the ethical implications of these decisions.
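The steps above can be sketched in a few lines of Python. This is only a minimal illustration, not the platform's actual code: the `Scenario` and `ResponseLog` names, the option labels, and the sample choices are all hypothetical and chosen for the example.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Scenario:
    """One hypothetical dilemma with exactly two possible outcomes."""
    scenario_id: str
    option_a: str
    option_b: str


@dataclass
class ResponseLog:
    """Anonymous tally of choices per scenario (no user identifiers are stored)."""
    tallies: dict = field(default_factory=dict)

    def record(self, scenario: Scenario, choice: str) -> None:
        """Store one decision, rejecting anything that is not an offered option."""
        if choice not in (scenario.option_a, scenario.option_b):
            raise ValueError(f"unknown choice: {choice!r}")
        self.tallies.setdefault(scenario.scenario_id, Counter())[choice] += 1

    def agreement(self, scenario: Scenario, choice: str) -> float:
        """Fraction of recorded responses that picked the same option."""
        counts = self.tallies.get(scenario.scenario_id, Counter())
        total = sum(counts.values())
        return counts[choice] / total if total else 0.0


# Hypothetical scenario and made-up choices, for illustration only.
scenario = Scenario("s1", "protect passengers", "protect pedestrians")
log = ResponseLog()
for choice in [
    "protect pedestrians",
    "protect pedestrians",
    "protect passengers",
    "protect pedestrians",
]:
    log.record(scenario, choice)

# How a user who chose "protect pedestrians" compares with everyone recorded.
share = log.agreement(scenario, "protect pedestrians")
print(f"{share:.0%} of respondents made the same choice")
```

Keeping only per-scenario counts, rather than per-user records, is one simple way to mirror the anonymous collection described above.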

The Moral Machine is designed to spark discussions about the ethical challenges that arise as AI and autonomous technologies become more prevalent in our society. It raises questions about how AI should be programmed to make decisions in situations where there are competing moral values and potential harm to humans and other beings.

By collecting and analyzing data from users worldwide, researchers hope to gain insights into cultural and societal variations in ethical preferences and to inform the development of ethical frameworks for AI and autonomous vehicles. The platform also encourages public engagement in the ethical debate surrounding AI technology.
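To make the idea of comparing preferences across groups concrete, here is a rough sketch of how such an analysis could start. The function name, the group labels "A" and "B", and the sample responses are made up for illustration and do not reflect real Moral Machine data or the researchers' actual methods.

```python
from collections import defaultdict


def preference_by_group(responses):
    """Return, per group, the share of responses that spared the pedestrians.

    `responses` is an iterable of (group, spared_pedestrians) pairs, where
    `spared_pedestrians` is True when the respondent chose to protect the
    pedestrians rather than the passengers.
    """
    totals = defaultdict(int)
    spared = defaultdict(int)
    for group, spared_pedestrians in responses:
        totals[group] += 1
        spared[group] += int(spared_pedestrians)
    return {group: spared[group] / totals[group] for group in totals}


# Made-up responses from two placeholder groups.
sample = [
    ("A", True),
    ("A", True),
    ("A", False),
    ("B", False),
    ("B", True),
]

rates = preference_by_group(sample)
for group, rate in sorted(rates.items()):
    print(f"group {group}: {rate:.0%} spared the pedestrians")
```

A real analysis would need far more responses per group and would have to account for differences between scenarios before drawing any conclusions about cultural variation.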

Ethics and Technology: Exploring the Moral Machine

As described above, the Moral Machine asks people to make moral decisions in hypothetical scenarios involving self-driving cars. The responses have been used to study how people from different cultures and backgrounds approach those decisions.

The Moral Machine raises a number of important ethical questions about the development and use of artificial intelligence. For example, how should self-driving cars be programmed to make moral decisions? Should they be programmed to prioritize the safety of the passengers or the safety of pedestrians?

The Moral Machine also raises questions about the role of humans in the development and use of AI. Who should be responsible for programming self-driving cars to make moral decisions? And how can we ensure that AI is used in a responsible and ethical way?

The Moral Dilemmas of AI: Insights from the Moral Machine

The Moral Machine has shown that there is a great deal of variation in how people make moral decisions in hypothetical scenarios involving self-driving cars. For example, some people believe that self-driving cars should always prioritize the safety of the passengers, even if this means sacrificing the safety of pedestrians. Others believe that self-driving cars should prioritize the safety of pedestrians, even if this means sacrificing the safety of the passengers.

The Moral Machine has also shown that people's moral decisions are influenced by a variety of factors, including their culture, their personal values, and the specific context of the scenario. For example, respondents were more likely to sacrifice pedestrians who were crossing illegally than pedestrians who were crossing lawfully.

Machine Morality: Understanding the Role of the Moral Machine

The Moral Machine is a valuable tool for understanding the ethical challenges posed by AI. The platform has shown that there is no easy answer to the question of how self-driving cars should be programmed to make moral decisions.

The Moral Machine also highlights the importance of human involvement in the development and use of AI. People need to be involved in deciding how AI systems make moral decisions, and people must remain accountable for ensuring that AI is used responsibly and ethically.

Conclusion

The Moral Machine is a powerful tool for exploring the ethical challenges posed by AI. Its results offer no easy answer to the question of how self-driving cars should be programmed to make moral decisions, but they do underline the importance of a public conversation about these issues and of ethical guidelines for the development and use of AI.