What does "diverge" mean in calculus?

In calculus, the term "diverge" describes the behavior of a sequence or series as the index n grows without bound. A sequence diverges when its terms do not approach a finite limit; a series diverges when its partial sums (the running totals a_1 + a_2 + ... + a_n) do not approach a finite limit. In other words, the values either grow without bound or keep oscillating without settling down to a specific number.

There are two primary types of divergence in calculus:

Divergence to Positive or Negative Infinity: A sequence or series is said to diverge to positive infinity if its terms (or, for a series, its partial sums) eventually exceed any given bound and stay above it as n increases. Similarly, it diverges to negative infinity if its terms or partial sums eventually fall below any given bound and stay below it.

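Mathematically, for a sequence (a_n):

- if lim (n → ∞) a_n = +∞, it diverges to positive infinity.

- if lim (n → ∞) a_n = -∞, it diverges to negative infinity.

For a series Σa_n, the same conditions are applied to the partial sums S_n = a_1 + a_2 + ... + a_n rather than to the terms a_n.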
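Stated precisely, as a sketch in standard notation (the bound M, the index N and the partial sum S_n are notation introduced here): a sequence diverges to ±∞ when it eventually stays beyond every bound, and a series does so when its partial sums do.

```latex
\begin{aligned}
\lim_{n\to\infty} a_n = +\infty
  &\iff \forall M\ \exists N\ \forall n \ge N:\ a_n > M,\\
\lim_{n\to\infty} a_n = -\infty
  &\iff \forall M\ \exists N\ \forall n \ge N:\ a_n < M,\\
\sum_{k=1}^{\infty} a_k = \pm\infty
  &\iff \lim_{n\to\infty} S_n = \pm\infty,
  \quad S_n = a_1 + a_2 + \cdots + a_n.
\end{aligned}
```

Being unbounded is not the same thing: 0, 1, 0, 2, 0, 3, ... has no upper bound, but it does not diverge to +∞ because it keeps returning to 0.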

Oscillatory Divergence: A sequence or series may also fail to converge without heading to infinity, instead oscillating or behaving erratically as n increases. This still counts as divergence, because there is no single finite value that the terms (or partial sums) approach.
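A small sketch of oscillatory divergence, using the standard examples a_n = (-1)^n and the series 1 - 1 + 1 - 1 + ...; the helper names are hypothetical. A finite computation only illustrates the pattern; it does not by itself prove divergence.

```python
from itertools import accumulate


def oscillating_terms(count: int) -> list[int]:
    """First `count` terms of a_n = (-1)**n, starting at n = 0."""
    return [(-1) ** n for n in range(count)]


def partial_sums(terms: list[int]) -> list[int]:
    """Running totals S_0, S_1, ... of the given terms."""
    return list(accumulate(terms))


terms = oscillating_terms(8)
sums = partial_sums(terms)
print(terms)  # [1, -1, 1, -1, 1, -1, 1, -1]
print(sums)   # [1, 0, 1, 0, 1, 0, 1, 0]
```

The terms alternate between 1 and -1 and the partial sums between 1 and 0, so both the sequence and the series diverge even though they stay bounded.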

The distinction between convergence (approaching a finite limit) and divergence (failing to) matters because only a convergent sequence or series has a well-defined limiting value to work with. Convergent sequences and series underpin concepts such as the definite integral, defined as a limit of Riemann sums, and power-series representations of functions, which makes them fundamental across mathematics and science.

Demystifying Divergence in Calculus: What Does It Mean?

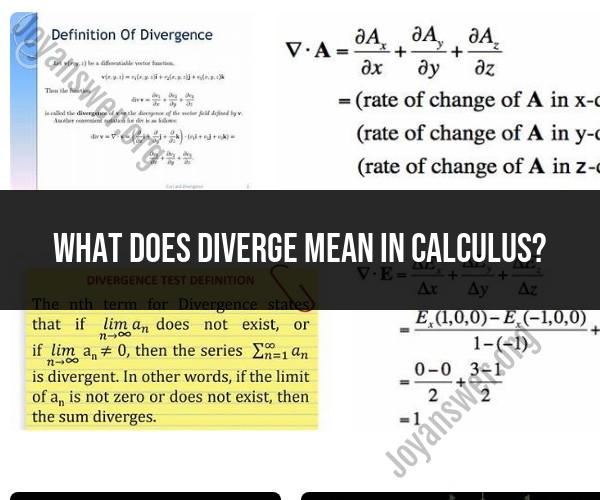

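In vector calculus, "divergence" also names a separate concept: an operator that measures how much a vector field spreads out from, or contracts toward, a given point. Precisely, it is the net outward flux of the field through the boundary of a small volume around the point, divided by that volume, in the limit as the volume shrinks to the point.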
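For reference, the standard definitions, written for a three-dimensional field F = (P, Q, R) (component names introduced for this sketch): the flux-per-volume limit, and the Cartesian formula it reduces to.

```latex
\begin{aligned}
\operatorname{div}\mathbf{F}(\mathbf{p})
  &= \lim_{|V| \to 0} \frac{1}{|V|} \oint_{\partial V} \mathbf{F}\cdot\mathbf{n}\,dS,\\
\nabla\cdot\mathbf{F}
  &= \frac{\partial P}{\partial x} + \frac{\partial Q}{\partial y} + \frac{\partial R}{\partial z}.
\end{aligned}
```

Here V is a small region containing p that shrinks to p, ∂V is its boundary, and n is the outward unit normal. For a two-dimensional field (P, Q), the ∂R/∂z term is dropped.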

A vector field is a function that assigns a vector to each point in space. It can be used to represent many different physical phenomena, such as fluid flow, electric fields, and magnetic fields.

The divergence of a vector field can be positive, negative, or zero. A positive divergence means the field is spreading out at that point (the point acts as a source); a negative divergence means it is contracting there (a sink); zero divergence means it is doing neither.
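To make the three cases concrete, here is a minimal sketch that estimates the divergence of a 2D field with central finite differences. The sample fields (outward F = (x, y), inward F = (-x, -y), rotating F = (-y, x)), the evaluation point and the step size h are illustrative choices, not taken from the text.

```python
from typing import Callable, Tuple

Vec2 = Tuple[float, float]
Field2D = Callable[[float, float], Vec2]


def divergence_2d(field: Field2D, x: float, y: float, h: float = 1e-5) -> float:
    """Estimate dP/dx + dQ/dy at (x, y) using central differences."""
    dP_dx = (field(x + h, y)[0] - field(x - h, y)[0]) / (2 * h)
    dQ_dy = (field(x, y + h)[1] - field(x, y - h)[1]) / (2 * h)
    return dP_dx + dQ_dy


def outward(x: float, y: float) -> Vec2:
    return (x, y)  # points away from the origin everywhere


def inward(x: float, y: float) -> Vec2:
    return (-x, -y)  # points toward the origin everywhere


def rotating(x: float, y: float) -> Vec2:
    return (-y, x)  # circulates around the origin


for name, f in [("outward", outward), ("inward", inward), ("rotating", rotating)]:
    print(name, round(divergence_2d(f, 1.0, 2.0), 6))
```

The estimates come out at about 2, -2 and 0, matching the spreading, contracting and neither cases. The rotating field shows that a field can be large and still have zero divergence.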

The Concept of Divergence in Calculus: Explained Simply

One way to picture divergence is to imagine a tiny cube around the point. The divergence of a vector field at that point is the net flux of the field out of the cube per unit volume, in the limit as the cube shrinks to the point.

If the divergence is positive, more flux leaves the cube than enters it, so the field is spreading out at that point.

If the divergence is negative, more flux enters the cube than leaves it, so the field is contracting at that point.

If the divergence is zero, the flux entering the cube balances the flux leaving it, so the field is neither spreading out nor contracting at that point.
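The tiny-cube picture can be checked numerically. This sketch computes the net outward flux through a cube of side h centered at a point and divides by the volume h³. The sample field F = (x³, y³, z³), with exact divergence 3x² + 3y² + 3z², is an illustrative choice: because each component depends only on its own coordinate, the field value at a face center times the face area is the exact flux through that face.

```python
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


def sample_field(x: float, y: float, z: float) -> Vec3:
    """Illustrative field F = (x^3, y^3, z^3); its divergence is 3x^2 + 3y^2 + 3z^2."""
    return (x ** 3, y ** 3, z ** 3)


def flux_per_volume(field: Callable[[float, float, float], Vec3],
                    p: Vec3, h: float) -> float:
    """Net outward flux through a cube of side h centered at p, divided by h**3.

    Each face contributes (normal component of the field at the face center) * area,
    which is exact for sample_field but only an approximation for general fields.
    """
    area = h * h
    net_flux = 0.0
    for axis in range(3):
        plus = list(p)
        minus = list(p)
        plus[axis] += h / 2   # center of the face whose outward normal is +e_axis
        minus[axis] -= h / 2  # center of the face whose outward normal is -e_axis
        net_flux += (field(*plus)[axis] - field(*minus)[axis]) * area
    return net_flux / h ** 3


point = (1.0, 2.0, 3.0)  # exact divergence here is 3 + 12 + 27 = 42
for h in (1.0, 0.1, 0.01):
    print(h, flux_per_volume(sample_field, point, h))
```

For this field the flux per volume works out to exactly 42 + 3h²/4, so the printed values approach the divergence 42 as the cube shrinks.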

Calculus and Convergence: Understanding the Term "Diverge"

The term "diverge" is used in calculus to describe a series or sequence that does not approach a finite limit. For example, the following infinite series diverges:

1 + 2 + 3 + 4 + ...

This is because the partial sums 1, 3, 6, 10, ..., which equal n(n + 1)/2 after n terms, grow without bound instead of approaching a limit.
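A quick sketch of the partial sums of 1 + 2 + 3 + 4 + ..., checked against the closed form S_n = n(n + 1)/2; the function name is hypothetical. The printed values only illustrate the growth; the divergence argument rests on the closed form, which exceeds any bound for large n.

```python
def partial_sum(n: int) -> int:
    """S_n = 1 + 2 + ... + n, computed by direct addition."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


for n in (1, 10, 100, 1000):
    closed_form = n * (n + 1) // 2
    assert partial_sum(n) == closed_form  # direct sum agrees with n(n + 1)/2
    print(n, partial_sum(n))
```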

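In a different sense, the word is also applied to vector fields: a field whose divergence is nonzero at a point acts as a source or sink there. A standard example is the field

F(x, y) = (x, y) / (x^2 + y^2), defined for (x, y) ≠ (0, 0).

Away from the origin its divergence is exactly zero, and the field is undefined at the origin itself. However, the outward flux through any circle centered at the origin equals 2π, so all of the field's spreading out is concentrated at the origin; in the sense of distributions, div F = 2πδ, where δ is the Dirac delta.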
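As a numerical sketch of this example: away from the origin the divergence of F = (x, y)/(x² + y²) is zero, yet the outward flux through a circle of any radius around the origin is 2π, which is why the field behaves like a point source there. The helper names, the sample point and the number of sample points are illustrative assumptions.

```python
import math
from typing import Tuple


def field(x: float, y: float) -> Tuple[float, float]:
    """F(x, y) = (x, y) / (x^2 + y^2), undefined at the origin."""
    r2 = x * x + y * y
    return (x / r2, y / r2)


def divergence(x: float, y: float, h: float = 1e-5) -> float:
    """Central-difference estimate of dP/dx + dQ/dy at a point off the origin."""
    dP_dx = (field(x + h, y)[0] - field(x - h, y)[0]) / (2 * h)
    dQ_dy = (field(x, y + h)[1] - field(x, y - h)[1]) / (2 * h)
    return dP_dx + dQ_dy


def flux_through_circle(radius: float, samples: int = 1000) -> float:
    """Approximate the outward flux of F through a circle centered at the origin."""
    total = 0.0
    d_theta = 2 * math.pi / samples
    for i in range(samples):
        theta = i * d_theta
        x, y = radius * math.cos(theta), radius * math.sin(theta)
        fx, fy = field(x, y)
        # Outward unit normal is (cos θ, sin θ); arc-length element is radius * dθ.
        total += (fx * math.cos(theta) + fy * math.sin(theta)) * radius * d_theta
    return total


print(divergence(1.0, 2.0))
for r in (0.1, 1.0, 10.0):
    print(r, flux_through_circle(r))
```

The flux does not depend on the radius, which is consistent with zero divergence in the ring between any two circles (by the divergence theorem).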

Conclusion

The word "divergence" therefore covers two distinct ideas in calculus: the failure of a sequence or series to approach a finite limit, and the divergence operator of vector calculus, which measures how a vector field spreads out or contracts. Both ideas are fundamental and have many applications in physics and engineering.