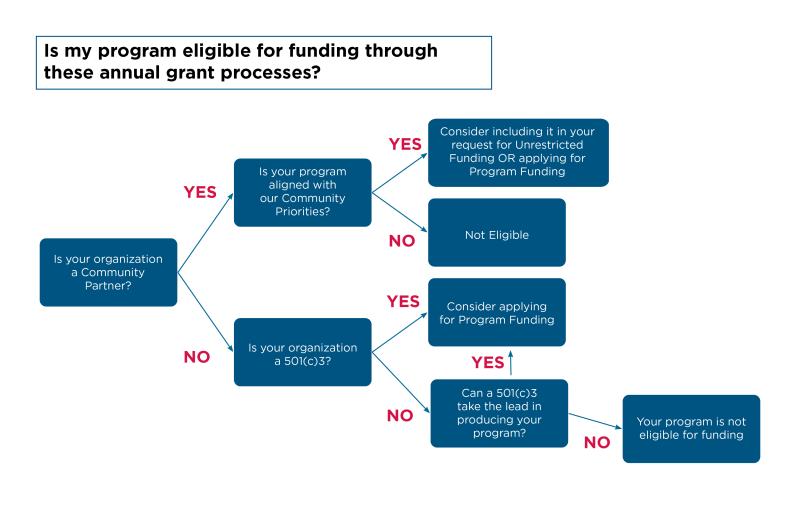

What is a decision tree?

A decision tree is a widely used model in statistics, machine learning, and data science. It represents a decision-making process as a tree: internal nodes are decision points that test a feature, branches are the possible outcomes of each test, and leaves are the final outcomes. Decision trees are used for classification and regression tasks, producing decisions or predictions from input data.

Here's a breakdown of the key components and concepts associated with decision trees:

Nodes:

- A decision tree consists of nodes, which represent decision points or steps in the decision-making process. The tree starts with a root node and branches out into internal nodes and leaf nodes.

Root Node:

- The root node is the initial decision point from which the tree branches out. It represents the first decision or test based on a specific feature or attribute.

Internal Nodes:

- Internal nodes represent subsequent decision points in the tree. Each internal node corresponds to a test or condition based on a particular feature.

Branches:

- Branches connect nodes and represent the possible outcomes of a decision or test. The branches lead to other nodes or leaves.

Leaves (Terminal Nodes):

- Leaves are the endpoints of the decision tree. They represent the final outcomes or predictions based on the decisions made at the internal nodes.

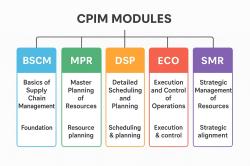

Features/Attributes:

- Features or attributes are the characteristics of the data used to make decisions at each internal node. These could be quantitative or categorical variables.

Splitting:

- Splitting refers to the process of dividing a node into two or more child nodes based on a specific feature and a threshold (for numerical features) or categories (for categorical features).

Decision Rules:

- Decision rules are determined by the conditions at each internal node. These rules guide the traversal of the tree until a leaf node is reached.

Prediction/Classification:

- Decision trees are used for both classification and regression tasks. In classification, each leaf node corresponds to a class label, while in regression, the leaf nodes provide a continuous prediction.
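To make the vocabulary above concrete, here is a minimal sketch of a tree as a data structure, together with the traversal that applies its decision rules. The `Node` class, the feature names (`income`, `debt_ratio`), the thresholds, and the class labels are all made up for illustration; real libraries store trees differently.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure for illustration)."""
    feature: Optional[str] = None       # feature tested at an internal node
    threshold: Optional[float] = None   # take the left branch if value <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prediction: Union[str, float, None] = None  # set only on leaves

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def predict(node: Node, sample: dict) -> Union[str, float, None]:
    """Follow the decision rules from the root until a leaf is reached."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.prediction


# A hand-built classification tree: the root tests income,
# one internal node tests debt_ratio, and three leaves hold class labels.
tree = Node(
    feature="income", threshold=50_000,
    left=Node(prediction="decline"),
    right=Node(
        feature="debt_ratio", threshold=0.4,
        left=Node(prediction="approve"),
        right=Node(prediction="review"),
    ),
)

print(predict(tree, {"income": 72_000, "debt_ratio": 0.25}))  # approve
```

A regression tree would use the same structure, with a number stored in `prediction` instead of a class label.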

Entropy and Information Gain (Optional):

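- When a tree is learned from data, the algorithm needs a criterion for choosing the best split at each node. A common choice is information gain: the reduction in entropy (a measure of how mixed the class labels are) achieved by splitting on a feature. Gini impurity is a widely used alternative, and regression trees typically use variance reduction instead.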
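As a rough illustration of these metrics, the sketch below computes Shannon entropy, H = -Σ p_i log2(p_i), and the information gain of one candidate split. The function names and the toy labels are assumptions chosen for the example.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of the children."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


# Toy labels: a perfectly mixed parent node and one candidate split of it.
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"] * 1
right = ["yes"] * 1 + ["no"] * 4

print(round(entropy(parent), 3))                         # 1.0
print(round(information_gain(parent, [left, right]), 3))  # 0.278
```

A split that separated the two classes perfectly would have a gain of 1.0 here, the full entropy of the parent.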

Decision trees are versatile and easy to understand, making them valuable for both analytical and interpretative purposes. They are often used in various domains, including business, finance, healthcare, and more, for tasks such as customer segmentation, risk assessment, and predictive modeling. While decision trees are prone to overfitting, techniques such as pruning and ensemble methods (e.g., Random Forests) are employed to enhance their robustness and generalization capabilities.

What is a decision tree and how does it work?

A decision tree is a flowchart-like structure that uses a series of conditional control statements to arrive at a conclusion or decision. It is a supervised learning algorithm that can be used for both classification and regression tasks.

How decision trees work:

Data input: The decision tree receives data consisting of input variables (features) and a target variable (label).

Tree construction: The algorithm recursively partitions the data into smaller subsets based on the values of the input variables.

Decision nodes: At each decision node, the algorithm picks the input variable and split value that best separate the target values, then splits the data on that test.

Branching: Each split creates new branches, leading to further subsets of the data.

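Leaf nodes: The process continues until a stopping condition is met, for example when all samples in a node share the same label, no split improves the result, or a limit such as maximum depth or minimum samples per node is reached. The node then becomes a leaf.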

Prediction: Leaf nodes contain the predictions or decisions based on the path taken through the tree.
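The steps above can be sketched as a small recursive builder. This is a simplified illustration rather than a faithful implementation of ID3, C4.5, or CART: it assumes numeric features, binary splits of the form `value <= threshold`, Gini impurity as the split criterion, and a maximum depth as the only extra stopping rule. The dictionary layout and the toy data are made up.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means the node is pure)."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature_index, threshold) that lowers impurity most, or None."""
    best, best_score = None, gini(labels)
    for f in range(len(rows[0])):
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            if not left or not right:
                continue  # a split must send samples down both branches
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (f, threshold), score
    return best


def build(rows, labels, depth=0, max_depth=3):
    """Recursively partition the data; each leaf stores the majority class."""
    split = best_split(rows, labels) if depth < max_depth else None
    if split is None:  # pure node, no split lowers impurity, or depth limit hit
        return {"leaf": Counter(labels).most_common(1)[0][0]}
    f, threshold = split
    left_idx = [i for i, row in enumerate(rows) if row[f] <= threshold]
    right_idx = [i for i, row in enumerate(rows) if row[f] > threshold]
    return {
        "feature": f,
        "threshold": threshold,
        "left": build([rows[i] for i in left_idx],
                      [labels[i] for i in left_idx], depth + 1, max_depth),
        "right": build([rows[i] for i in right_idx],
                       [labels[i] for i in right_idx], depth + 1, max_depth),
    }


def predict(tree, row):
    """Walk from the root to a leaf and return the stored prediction."""
    while "leaf" not in tree:
        branch = "left" if row[tree["feature"]] <= tree["threshold"] else "right"
        tree = tree[branch]
    return tree["leaf"]


# Toy data (made up): [hours_studied, hours_slept] -> exam result
rows = [[1, 4], [2, 8], [3, 5], [6, 7], [7, 4], [8, 8]]
labels = ["fail", "fail", "fail", "pass", "pass", "pass"]

tree = build(rows, labels)
print(tree)
print(predict(tree, [5, 6]))  # pass
```

On this data a single split on the first feature (`hours_studied <= 3`) separates the classes completely, so the builder stops after one level with two pure leaves.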

When should you use a decision tree?

Decision trees are versatile algorithms suitable for various applications, including:

Classification: Predicting a discrete outcome or category, such as labeling an email as spam or not spam.

Regression: Predicting a continuous numerical value, such as forecasting sales or estimating house prices.

Exploratory data analysis: Uncovering patterns, relationships, and trends within data sets.

Reasons to use decision trees:

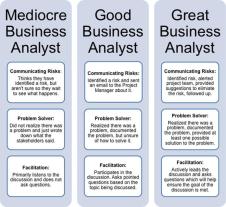

Easy to interpret: The tree structure provides a clear visual representation of the decision-making process.

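Robust to outliers: Because splits depend on how feature values are ordered rather than on their magnitude, extreme feature values have little effect on the tree. Some algorithms and implementations can also handle missing values directly, although this varies from one to another.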

No feature scaling: Decision trees do not require feature scaling, making them simpler to implement.

Handle non-linear relationships: Decision trees can capture non-linear relationships between features and the target variable.
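The point about feature scaling can be checked with a small experiment. The sketch below, using made-up income values and a hypothetical `partition` helper, applies a threshold test before and after min-max scaling and shows that the same samples end up in the left branch.

```python
def partition(values, threshold):
    """Indices of the samples sent to the left branch by `value <= threshold`."""
    return [i for i, v in enumerate(values) if v <= threshold]


incomes = [18_000, 32_000, 47_000, 61_000, 95_000]  # made-up raw values
threshold = 50_000

# Min-max scale the feature, and map the threshold through the same transformation.
lo, hi = min(incomes), max(incomes)


def scale(v):
    return (v - lo) / (hi - lo)


scaled_incomes = [scale(v) for v in incomes]
scaled_threshold = scale(threshold)

print(partition(incomes, threshold))                # [0, 1, 2]
print(partition(scaled_incomes, scaled_threshold))  # [0, 1, 2]
```

Any order-preserving rescaling of a feature leaves the possible partitions unchanged, which is why tree-based models are usually trained without standardization or normalization.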

How to create a decision tree

Creating a decision tree involves the following steps:

Data preparation: Clean and prepare the data, handling missing values and encoding categorical variables if necessary.

Algorithm selection: Choose an appropriate decision tree algorithm, such as ID3, C4.5, or CART.

Tree construction: Train the decision tree using the selected algorithm and the prepared data.

Pruning: Optionally simplify the tree by removing branches that add little predictive value, which helps prevent overfitting.

Evaluation: Evaluate the performance of the decision tree using metrics like accuracy (classification) or mean squared error (regression).

Interpretation: Analyze the decision tree to understand the decision-making process and identify important features.
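For the evaluation step, the two metrics mentioned above are simple to compute by hand. The following sketch uses made-up true and predicted values; in practice the predictions would come from a trained tree applied to held-out data that was not used for training.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true class labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average squared difference between true and predicted values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Classification: three of four predictions are correct.
print(accuracy(["spam", "ham", "spam", "ham"],
               ["spam", "ham", "ham", "ham"]))  # 0.75

# Regression: squared errors are 1, 0 and 4, so the mean is 5/3.
print(round(mean_squared_error([3.0, 5.0, 2.0], [2.0, 5.0, 4.0]), 3))  # 1.667
```

Higher accuracy and lower mean squared error indicate better predictions. A large gap between training and held-out scores is a common sign of the overfitting that pruning is meant to address.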